Lecture material weeks 1-15


LECTURE 1. NUMBER SYSTEMS

Decimal System

Most people today use decimal representation to count. In the decimal system there are 10 digits:

0, 1, 2, 3, 4, 5, 6, 7, 8, 9

These digits can represent any value, for example:

754.

The value is the sum of each digit multiplied by the base (in this case 10, because there are 10 digits in the decimal system) raised to the power of the digit's position (counting from zero at the rightmost digit).
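Written out with this rule, 754 expands as:

```latex
754 = 7 \cdot 10^2 + 5 \cdot 10^1 + 4 \cdot 10^0 = 700 + 50 + 4
```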

The position of each digit is very important! For example, if you move the "7" to the end:

547

it represents a different value.
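Applying the same rule to 547 gives:

```latex
547 = 5 \cdot 10^2 + 4 \cdot 10^1 + 7 \cdot 10^0 = 500 + 40 + 7
```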

Important note: any number raised to the power of zero is 1; by convention, even zero raised to the power of zero is taken to be 1.
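The positional rule can be sketched in Python as follows. This is a minimal illustration, not part of the original lecture. The helper name `positional_value` is hypothetical, and the sketch assumes digits are written as 0-9 followed by the letters A-F, so it covers bases up to 16.

```python
def positional_value(digits, base):
    """Sum of digit * base**position, with position 0 at the rightmost digit."""
    symbols = "0123456789ABCDEF"
    total = 0
    # reversed() makes the rightmost digit come first, so it gets position 0
    for position, ch in enumerate(reversed(digits.upper())):
        digit = symbols.index(ch)
        if digit >= base:
            raise ValueError(f"digit {ch!r} is not valid in base {base}")
        total += digit * base ** position
    return total

print(positional_value("754", 10))  # 754
print(positional_value("547", 10))  # 547
print(0 ** 0)                       # 1: Python follows the same convention
```

The same function works for the binary and hexadecimal systems below by passing 2 or 16 as the base.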

Binary System

Computers are not as smart as humans are (or not yet), but it is easy to make an electronic machine with two states: on and off, or 1 and 0.

Computers use the binary system, which uses 2 digits:

0, 1

And thus the base is 2.

Each digit in a binary number is called a BIT; 4 bits form a NIBBLE, 8 bits form a BYTE, two bytes form a WORD, and two words form a DOUBLE WORD (rarely used).

By convention, a "b" is added at the end of a binary number, so 101b can be recognized as a binary number with the decimal value 5.

The binary number 10100101b equals the decimal value 165.
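Expanded with the positional rule, using 2 as the base:

```latex
10100101_2 = 1 \cdot 2^7 + 0 \cdot 2^6 + 1 \cdot 2^5 + 0 \cdot 2^4 + 0 \cdot 2^3 + 1 \cdot 2^2 + 0 \cdot 2^1 + 1 \cdot 2^0 = 128 + 32 + 4 + 1 = 165
```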

Hexadecimal System

The hexadecimal system uses 16 digits:

0, 1, 2, 3, 4, 5, 6, 7, 8, 9, A, B, C, D, E, F

And thus the base is 16.

Hexadecimal numbers are compact and easy to read.

It is very easy to convert numbers from the binary system to the hexadecimal system and vice versa: every nibble (4 bits) can be converted to a hexadecimal digit using this table:

Decimal (base 10) | Binary (base 2) | Hexadecimal (base 16)
------------------+-----------------+----------------------
0                 | 0000            | 0
1                 | 0001            | 1
2                 | 0010            | 2
3                 | 0011            | 3
4                 | 0100            | 4
5                 | 0101            | 5
6                 | 0110            | 6
7                 | 0111            | 7
8                 | 1000            | 8
9                 | 1001            | 9
10                | 1010            | A
11                | 1011            | B
12                | 1100            | C
13                | 1101            | D
14                | 1110            | E
15                | 1111            | F
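Here is a minimal Python sketch of the nibble-by-nibble conversion, built directly from the table above. The names `binary_to_hex` and `hex_to_binary` are hypothetical, and the sketch assumes the binary input contains only the characters 0 and 1.

```python
# The table above: 4-bit pattern -> hexadecimal digit, and the reverse
HEX_DIGITS = "0123456789ABCDEF"
NIBBLE_TO_HEX = {format(i, "04b"): HEX_DIGITS[i] for i in range(16)}
HEX_TO_NIBBLE = {h: b for b, h in NIBBLE_TO_HEX.items()}

def binary_to_hex(bits):
    # pad on the left with zeros so the length is a multiple of 4
    bits = bits.zfill((len(bits) + 3) // 4 * 4)
    nibbles = [bits[i:i + 4] for i in range(0, len(bits), 4)]
    return "".join(NIBBLE_TO_HEX[n] for n in nibbles)

def hex_to_binary(hex_digits):
    # every hexadecimal digit expands to exactly one 4-bit nibble
    return "".join(HEX_TO_NIBBLE[h] for h in hex_digits.upper())

print(binary_to_hex("10100101"))  # A5
print(hex_to_binary("5F"))        # 01011111
```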

By convention, an "h" is added at the end of a hexadecimal number, so 5Fh can be recognized as a hexadecimal number with the decimal value 95.

We also add a "0" (zero) at the beginning of hexadecimal numbers that begin with a letter (A..F), for example 0E120h.

The hexadecimal number 1234h is equal to the decimal value 4660.
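Expanded with the positional rule, using 16 as the base:

```latex
1234_{16} = 1 \cdot 16^3 + 2 \cdot 16^2 + 3 \cdot 16^1 + 4 \cdot 16^0 = 4096 + 512 + 48 + 4 = 4660
```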

Converting from Decimal System to Any Other

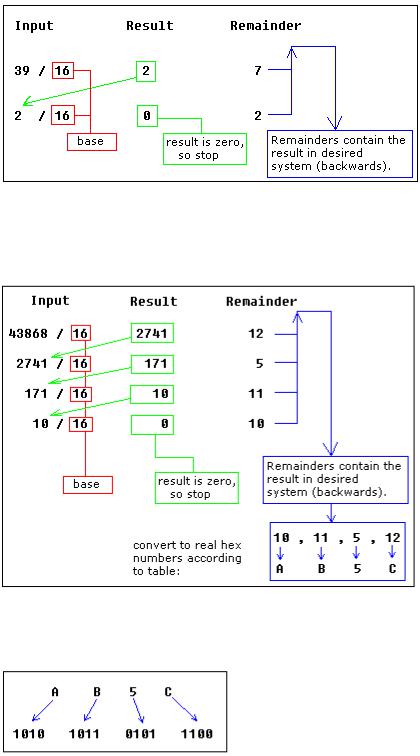

To convert from the decimal system to any other system, divide the decimal value by the base of the desired system. Each time, keep the remainder and continue by dividing the quotient; the division process continues until the quotient is zero.

The remainders, read in reverse order (the last remainder becomes the leftmost digit), are then used to represent the value in that system.

Let's convert the value 39 (base 10) to the hexadecimal system (base 16).
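The repeated division for 39, with the remainders read from the last to the first:

```latex
\begin{aligned}
39 \div 16 &= 2 \text{ remainder } 7 \\
2 \div 16 &= 0 \text{ remainder } 2
\end{aligned}
```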

As you can see, we got the hexadecimal number 27h.

All remainders in the above example were below 10, so no letters were needed. Here is another, more complex example:

Let's convert the decimal number 43868 to hexadecimal form.
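The repeated division for 43868 gives these remainders, read from the last to the first:

```latex
\begin{aligned}
43868 \div 16 &= 2741 \text{ remainder } 12 \;(\mathrm{C}) \\
2741 \div 16 &= 171 \text{ remainder } 5 \\
171 \div 16 &= 10 \text{ remainder } 11 \;(\mathrm{B}) \\
10 \div 16 &= 0 \text{ remainder } 10 \;(\mathrm{A})
\end{aligned}
```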

The result is 0AB5Ch; the table above is used to convert remainders greater than 9 to the corresponding letters.

Using the same principle we can convert to binary form (using 2 as the divisor), or first convert to a hexadecimal number and then convert that to a binary number using the table above.

As you can see, we got the binary number 1010101101011100b.
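The repeated-division method can be sketched in Python. The function name `from_decimal` is hypothetical; the sketch assumes a non-negative integer input and a base between 2 and 16.

```python
DIGITS = "0123456789ABCDEF"

def from_decimal(value, base):
    """Convert a non-negative integer to a digit string in the given base (2..16)."""
    if value == 0:
        return "0"
    remainders = []
    while value > 0:
        value, remainder = divmod(value, base)  # keep dividing the quotient
        remainders.append(DIGITS[remainder])    # remainders over 9 become letters
    # the first remainder is the rightmost digit, so read them in reverse
    return "".join(reversed(remainders))

print(from_decimal(39, 16))     # 27
print(from_decimal(43868, 16))  # AB5C
print(from_decimal(43868, 2))   # 1010101101011100
```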

Signed Numbers

There is no way to say for sure whether the hexadecimal byte 0FFh is positive or negative; it can represent both the decimal value 255 and -1.

8 bits can be used to create 256 combinations (including zero), so we simply presume that the first 128 combinations (0..127) represent positive numbers (and zero) and the next 128 combinations (128..255) represent negative numbers.

To get -5, we subtract 5 from the number of combinations (256): 256 - 5 = 251.

This seemingly complex way of representing negative numbers has a purpose: in math, when you add -5 to 5 you should get zero.

This is exactly what happens when the processor adds the two bytes 5 and 251: the result (256) does not fit in 8 bits, the ninth bit is lost, and the byte that remains is zero!

When combinations 128..255 are used, the high bit is always 1, so it may be used to determine the sign of a number.

The same principle is used for words (16-bit values): 16 bits create 65536 combinations; the first 32768 combinations (0..32767) represent positive numbers (and zero), and the next 32768 combinations (32768..65535) represent negative numbers.
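The rules above can be checked with a short Python sketch. The helper names `to_signed` and `to_pattern` are hypothetical. `to_pattern` uses a bit mask, which for a negative number gives the same result as subtracting it from the number of combinations (for example 256 - 5 = 251 for a byte).

```python
def to_signed(pattern, bits):
    """Interpret an unsigned bit pattern (0 .. 2**bits - 1) as a signed number."""
    combinations = 2 ** bits
    # patterns in the upper half (high bit set) stand for negative numbers
    return pattern - combinations if pattern >= combinations // 2 else pattern

def to_pattern(number, bits):
    """Encode a signed number as the bit pattern stored in `bits` bits."""
    return number & (2 ** bits - 1)

print(to_pattern(-5, 8))       # 251
print(to_signed(0xFF, 8))      # -1
print((5 + 251) & 0xFF)        # 0: the ninth bit is lost
print(to_signed(0x8000, 16))   # -32768
```

Python integers have no fixed width, so the mask `& 0xFF` stands in for the processor dropping the ninth bit.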

The Binary System

A pretty damn clear guide to a quite confusing concept by Christine R. Wright with some help from Samuel A. Rebelsky.

LECTURE 2. INTRODUCTION TO COMPUTER ARCHITECTURE

In computer science and engineering, computer architecture refers to the specification of the relationship between different hardware components of a computer system.[1] It may also refer to the practical art of defining the structure and relationship of the subcomponents of a computer. As in the architecture of buildings, computer architecture can comprise many levels of information. The highest level of the definition conveys the concepts being implemented. Whereas in building architecture this overview is normally visual, computer architecture is primarily logical, positing a conceptual system that serves a particular purpose. In both instances (building and computer), many levels of detail are required to completely specify a given implementation, and some of these details are often implied as common practice.

For example, at a high level, computer architecture is concerned with how the central processing unit (CPU) acts and how it accesses computer memory. Some computer architectures that were fashionable at the time of writing (2011) include cluster computing and Non-Uniform Memory Access.

From the early days, computers have been used to design the next generation. Programs written in the proposed instruction language can be run on a current computer via emulation. At this stage, it is now common for compiler designers to collaborate, suggesting improvements to the ISA. Modern simulators normally measure time in clock cycles and give power consumption estimates in watts or, especially for mobile systems, energy consumption in joules.

The art of computer architecture has three main subcategories:[2]

Instruction set architecture, or ISA. The ISA is the code that a central processor reads and acts upon. It is the machine language (or assembly language), including the instruction set, word size, memory address modes, processor registers, and address and data formats.

Microarchitecture, also known as computer organization, describes the data paths, data processing elements and data storage elements, and describes how they should implement the ISA.[3] The size of a computer's CPU cache, for instance, is an organizational issue that generally has nothing to do with the ISA.

System Design includes all of the other hardware components within a computing system. These include:

1. Data paths, such as computer buses and switches

2. Memory controllers and hierarchies

3. Data processing other than the CPU, such as direct memory access (DMA)

4. Miscellaneous issues such as virtualization or multiprocessing.

Computer architecture can also be described as the coordination of abstract levels of a processor under changing forces, involving design, measurement and evaluation. It also includes the overall fundamental working principle of the internal logical structure of a computer system.

It can also be defined as the design of the task-performing part of computers, i.e. how various gates and transistors are interconnected and are caused to function per the instructions given by an assembly language programmer.

Computer organization

Computer organization helps optimize performance-based products. For example, software engineers need to know the processing ability of processors. They may need to optimize software in order to gain the most performance at the least expense. This can require quite detailed analysis of the computer organization. For example, in a multimedia decoder, the designers might need to arrange for most data to be processed in the fastest data path. In computer organization, the various components are assumed to be in place, and the task is to investigate the organizational structure to verify that the computer parts operate as intended.

Once instruction set and microarchitecture are described, a practical machine must be designed. This design process is called the implementation. Implementation is usually not considered architectural definition, but rather hardware design engineering. Implementation can be further broken down into several (not fully distinct) steps:

Central Processing Unit (CPU)

∙Also called the “chip” or “processor”

∙The brain of the computer

∙Major components:

  ∙Arithmetic Logic Unit (ALU): the calculator

  ∙Control unit: controls the calculator

  ∙Communication bus systems

Fetch-Execute Cycle

1. Fetch instruction from memory

2. Decode instruction in control unit

3. Execute instruction (data may be fetched from memory)

4. Store results if necessary

5. Repeat!
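The cycle above can be illustrated with a toy machine in Python. The instruction set (LOAD, ADD, STORE, HALT), the single accumulator register and the memory layout are all hypothetical simplifications, not a real processor.

```python
def run(memory):
    """Run the fetch-execute cycle on a toy machine until HALT.

    memory is a list: instructions are (opcode, address) tuples and
    data cells are plain integers. The opcodes are hypothetical.
    """
    acc = 0  # accumulator register
    pc = 0   # program counter: address of the next instruction
    while True:
        opcode, address = memory[pc]  # 1. fetch the instruction
        pc += 1
        # 2. decode: pick the action that matches the opcode
        if opcode == "LOAD":          # 3. execute, fetching data from memory
            acc = memory[address]
        elif opcode == "ADD":
            acc += memory[address]
        elif opcode == "STORE":       # 4. store the result
            memory[address] = acc
        elif opcode == "HALT":
            return memory
        else:
            raise ValueError(f"unknown opcode {opcode!r}")
        # 5. repeat: the loop goes back to fetch

program = [
    ("LOAD", 4),   # address 0
    ("ADD", 5),    # address 1
    ("STORE", 6),  # address 2
    ("HALT", 0),   # address 3
    2, 3, 0,       # addresses 4, 5, 6: data
]
print(run(program)[6])  # 5
```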

Registers

∙Temporary storage containers used inside the CPU

∙Extremely fast

∙Fixed size, usually a multiple of 8 bits

  ∙Also called a “word”

  ∙Example: 32-bit machines (4-byte words)

  ∙How large is a word in a 64-bit machine?

Cache

∙Slower than registers

∙Faster than RAM

∙Located in front of main RAM

∙Different levels of cache

  ∙Level 1 (L1) and Level 2 (L2)

∙Size is usually around 1 MB

Memory Hierarchy

LECTURE 3. INTRODUCTION TO OPERATING SYSTEM

An operating system (commonly abbreviated to either OS or O/S) is an interface between hardware and user; it is responsible for the management and coordination of activities and the sharing of the limited resources of the computer. The operating system acts as a host for applications that are run on the machine. As a host, one of the purposes of an operating system is to handle the details of the operation of the hardware. This relieves application programs from having to manage these details and makes it easier to write applications. Almost all computers, including handheld computers, desktop computers, supercomputers, and even video game consoles, use an operating system of some type. Some of the oldest models may, however, use an embedded operating system that may be contained on a compact disc or other data storage device.

Common contemporary operating systems include Mac OS, Windows, Linux, BSD and Solaris. While servers generally run on Unix or Unix-like systems, embedded device markets are split amongst several operating systems.

The operating system is the most important program that runs on a computer. Every general-purpose computer must have an operating system to run other programs. Operating systems perform basic tasks, such as recognizing input from the keyboard, sending output to the display screen, keeping track of files and directories on the disk, and controlling peripheral devices such as disk drives and printers.

Operating systems provide a software platform on top of which other programs, called application programs, can run. The application programs must be written to run on top of a particular operating system. Your choice of operating system, therefore, determines to a great extent the applications you can run. For PCs, the most popular operating systems are DOS, OS/2, and Windows, but others are available, such as Linux.

History of Operating System

The history of computer operating systems recapitulates to a degree the recent history of computer hardware.

Operating systems (OSes) provide a set of functions needed and used by most application programs on a computer, and the necessary linkages for the control and synchronization of the computer's hardware. On the first computers, without an operating system, every program needed the full hardware specification to run correctly and perform standard tasks, and its own drivers for peripheral devices like printers and card readers. The growing complexity of hardware and application programs eventually made operating systems a necessity.

Systems on IBM hardware: The state of affairs continued until the 1960s when IBM, already a leading hardware vendor, stopped work on existing systems and put all its effort into developing the System/360 series of machines, all of which used the same instruction architecture. IBM also intended to develop a single operating system for the new hardware, the OS/360. The problems encountered in the development of the OS/360 are legendary, and are described by Fred Brooks in The Mythical Man-Month, a book that has become a classic of software engineering. Because of performance differences across the hardware range and delays with software development, a whole family of operating systems was introduced instead of a single OS/360.

IBM wound up releasing a series of stop-gaps followed by three longer-lived operating systems:

∙OS/MFT for mid-range systems. This had one successor, OS/VS1, which was discontinued in the 1980s.

∙OS/MVT for large systems. This was similar in most ways to OS/MFT (programs could be ported between the two without being re-compiled), but had more sophisticated memory management and a time-sharing facility, TSO. MVT had several successors including the current z/OS.

∙DOS/360 for small System/360 models had several successors including the current z/VSE. It was significantly different from OS/MFT and OS/MVT.

∙IBM maintained full compatibility with the past, so that programs developed in the sixties can still run under z/VSE (if developed for DOS/360) or z/OS (if developed for OS/MFT or OS/MVT) with no change.

The case of 8-bit home computers and game consoles

Home computers: Although most small 8-bit home computers of the 1980s, such as the Commodore 64, the Atari 8-bit, the Amstrad CPC, ZX Spectrum series and others, could use a disk-loading operating system such as CP/M or GEOS, they could generally work without one. In fact, most if not all of these computers shipped with a built-in BASIC interpreter on ROM, which also served as a crude operating system, allowing minimal file management operations (such as deletion, copying, etc.) to be performed and sometimes disk formatting, along of course with application loading and execution, which sometimes required a non-trivial command sequence, like with the Commodore 64.

The fact that the majority of these machines were bought for entertainment and educational purposes and were seldom used for more "serious" or business/science oriented applications, partly explains why a "true" operating system was not necessary.

Another reason is that they were usually single-task and single-user machines and shipped with minimal amounts of RAM, usually between 4 and 256 kilobytes, with 64 and 128 being common figures, and 8-bit processors, so an operating system's overhead would likely compromise the performance of the machine without really being necessary.

Even the available word processor and integrated software applications were mostly self-contained programs which took over the machine completely, as did video games.

Game consoles and video games: Since virtually all video game consoles and arcade cabinets designed and built after 1980 were true digital machines (unlike the analog Pong clones and derivatives), some of them carried a minimal form of BIOS or built-in game, such as the ColecoVision, the Sega Master System and the SNK Neo Geo. There were however successful designs where a BIOS was not necessary, such as the Nintendo NES and its clones.

Modern day game consoles and videogames, starting with the PC-Engine, all have a minimal BIOS that also provides some interactive utilities such as memory card management, Audio or Video CD playback, copy protection and sometimes carry libraries for developers to use etc. Few of these cases, however, would qualify as a "true" operating system.

The most notable exceptions are probably the Dreamcast game console, which includes a minimal BIOS like the PlayStation but can load the Windows CE operating system from the game disk, allowing easy porting of games from the PC world, and the Xbox game console, which is little more than a disguised Intel-based PC running a secret, modified version of Microsoft Windows in the background. Furthermore, there are Linux versions that will run on a Dreamcast and later game consoles as well.

Long before that, Sony had released a kind of development kit called the Net Yaroze for its first PlayStation platform, which provided a series of programming and developing tools to be used with a normal PC and a specially modified "Black PlayStation" that could be interfaced with a PC and download programs from it. These operations require in general a functional OS on both platforms involved.

In general, it can be said that videogame consoles and arcade coin operated machines used at most a built-in BIOS during the 1970s, 1980s and most of the 1990s, while from the PlayStation era and beyond they started getting more and more sophisticated, to the point of requiring a generic or custom-built OS for aiding in development and expandability.

The personal computer era: Apple, PC/MS/DR-DOS and beyond

The development of microprocessors made inexpensive computing available for the small business and hobbyist, which in turn led to the widespread use of interchangeable hardware components using a common interconnection (such as the S-100, SS-50, Apple II, ISA, and PCI buses), and an increasing need for 'standard' operating systems to control them. The most important of the early OSes on these machines was Digital Research's CP/M-80 for the 8080 / 8085 / Z-80 CPUs. It was based on several Digital Equipment Corporation operating systems, mostly for the PDP-11 architecture. Microsoft's first operating system, M-DOS, was designed along many of the PDP-11 features, but for microprocessor-based systems. MS-DOS (or PC-DOS when supplied by IBM) was based originally on CP/M-80. Each of these machines had a small boot program in ROM which loaded the OS itself from disk. The BIOS on the IBM-PC class machines was an extension of this idea and has accreted more features and functions in the 20 years since the first IBM-PC was introduced in 1981.

The decreasing cost of display equipment and processors made it practical to provide graphical user interfaces for many operating systems, such as the generic X Window System that is provided with many UNIX systems, or other graphical systems such as Microsoft Windows, the RadioShack Color Computer's OS-9 Level II/MultiVue, Commodore's AmigaOS, Apple's Mac OS, or even IBM's OS/2. The original GUI was developed at Xerox Palo Alto Research Center in the early '70s (the Alto computer system) and imitated by many vendors.

LECTURE 4. FUNCTIONS OF TCP/IP REFERENCE MODEL.

TCP/IP is a collective name for the suite of network protocols that handle the great majority of network communication between computers. The internet is the largest and best-known TCP/IP network. The name TCP/IP is a contraction of the two best-known protocols that are part of the TCP/IP protocol stack: the Transmission Control Protocol (TCP) and the Internet Protocol (IP). TCP/IP is pronounced as "TCP over IP" or …

History

The internet is an open network. On this network, the TCP/IP protocol is used to exchange data. TCP/IP is a packet-switched protocol in which data is sent in small packets, independently of one another. The communication software puts the packets back in the correct order and detects any errors in reception, requesting specific packets again if necessary, until all packets have been received.
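Here is a simplified Python sketch of how the receiving side can restore the order of packets that arrived independently. The helper names are hypothetical, and whole packets are numbered here for simplicity; real TCP numbers bytes rather than packets.

```python
import random

def split_into_packets(data, size):
    """Cut data into numbered packets: (sequence number, chunk)."""
    return [(seq, data[i:i + size])
            for seq, i in enumerate(range(0, len(data), size))]

def reassemble(packets):
    """Put packets back in order by sequence number and join the chunks."""
    return "".join(chunk for _, chunk in sorted(packets))

packets = split_into_packets("packet-switched network", 4)
random.Random(1).shuffle(packets)  # packets arrive in a different order
print(reassemble(packets))  # packet-switched network
```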

This way of working made it possible, on the predecessor of the internet, ARPANET, to send information in small packets along different routes. As so often, this was the solution to a military problem. In the event of a war, if some computers in a network were knocked out, the remaining computers still had to be able to exchange their data. If part of the network was gone, the data was sent to its destination along another route. This made the network less vulnerable. The military's goal was a network that would always keep working.

Nevertheless, after a while this network was found wanting, and the military moved to MILnet. From then on, this protocol became common among the various universities that were connected to one another.

Characteristics

The internet is a so-called packet-switched network, without any guarantee of service. A packet of data can simply be lost; in fact, when a particular link is overloaded, discarding packets is even recommended. On top of this unreliable network, the TCP protocol provides a seemingly reliable service: TCP keeps track of whether packets arrive (in the correct order) and, if they do not, sends no acknowledgement. If a given waiting period (timeout) expires at the sender without an acknowledgement having arrived, the sender sends the packet again.
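The acknowledge-and-retransmit idea can be sketched as a stop-and-wait loop in Python. This is a deliberately simplified scheme: real TCP keeps several segments in flight at once, and here the timeout is not measured in time but modeled as "no acknowledgement came back, so send again". The function name, the random loss model, `loss_rate` and `seed` are all assumptions for illustration.

```python
import random

def send_reliably(chunks, loss_rate, seed=0, max_tries=100):
    """Stop-and-wait sketch: resend each chunk until it is acknowledged."""
    rng = random.Random(seed)  # seeded so that a run can be repeated
    received = []              # what the receiver has accepted, in order
    transmissions = 0
    for seq, chunk in enumerate(chunks):
        for _ in range(max_tries):
            transmissions += 1
            if rng.random() < loss_rate:
                continue  # packet lost: no acknowledgement, resend after the timeout
            if len(received) == seq:
                received.append(chunk)  # new packet: the receiver accepts it
            # a duplicate (already accepted) is ignored but acknowledged again
            if rng.random() >= loss_rate:
                break  # acknowledgement arrived: go on to the next chunk
            # acknowledgement lost: the sender times out and resends
        else:
            raise TimeoutError(f"chunk {seq} was never acknowledged")
    return received, transmissions

data, count = send_reliably(["ph", "ot", "o"], loss_rate=0.3, seed=7)
print(data)  # ['ph', 'ot', 'o']
```

The `count` value shows how many transmissions, including retransmissions, were needed for that run.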

Because of these characteristics, TCP/IP is very suitable for network services where no guarantee is required about the reliability and timing of when particular data has to arrive. For example, when downloading a small photo from the internet, it does not matter that some data is lost through packet loss, as long as TCP corrects it.

A telephone call has very different requirements. Here, every packet should arrive at exactly the right moment. Preferably, packets should not be discarded, but if that does happen, it is pointless to send them again, because the glitch in the sound has already occurred. For this purpose, the UDP protocol can be used instead; it sends individual packets and thus avoids TCP's retransmission behavior. However, because there is still no guarantee about the timing and reliability of data arrival, certain properties remain inherent to the system.