10.Stabilisation and state estimation

.pdf22/10/2004 |

10.2 Methods for constructing stabilising control laws |

415 |

Thus we can now restrict our search for feasible output feedback constants to those in the interval (Flower, Fupper). However, we still do not know when an output feedback constant F does stabilise. Let us address this by giving a result of Chen [1993]. To state the result, we need the notion of a “generalised eigenvalue.” Given matrices M1, M2 Rn×n, a generalised eigenvalue is a number λ C that satisfies

det(M1 − λM2) = 0.

We denote by σ(M1, M2) the set of generalised eigenvalues. We also recall from Section 5.5.2 the n × n Hurwitz matrix H(P ) that we may associate to a polynomial P R[s] of degree n. Note that if Q R[s] has degree less than n, then we may still define an n × n matrix H(Q) by thinking of Q as being degree n with the coe cients of the higher order terms being zero.

10.23 Theorem Let Σ = (A, b, ct, 01) be a controllable SISO linear system. Denote

P (s) = det(sIn − A), Q(s) = ctadj(sIn − A)b

with H(P ) and H(Q) the corresponding Hurwitz matrices, supposing both to be n × n. Let

σ(H(P ), −H(Q)) ∩ R = {λ1, . . . , λk}

with λ1 ≤ · · · ≤ λk. The following statements hold:

(i) |

for i {1, . . . , k}, the closed-loop system Σλi is not internally asymptotically stable; |

|

(ii) |

for |

j {1, . . . , k − 1}, the closed-loop system ΣF is internally asymptotically stable |

|

for |

all F (λj, λj+1) if and only if ΣF¯ is internally asymptotically stable for some |

|

¯ |

|

|

F (λj, λj+1). |

|

We do not present the proof of this theorem, as the elements needed to get the proof underway would take us too far afield. However, let us indicate how one might use the theorem.

10.24 Method for generating stabilising output feedback constants Suppose you are given Σ = (A, b, ct, D).

1. Compute

P (s) = det(sIn − A), Q(s) = ctadj(sIn − A)b.

2.Compute Flower and Fupper as in Proposition 10.22.

3.Compute H(P ) and H(Q) as in Theorem 10.23.

4.Compute and order {λ1, . . . , λk} = σ(H(P ), −H(Q)) ∩ R.

5.If (λj, λj+1) 6 (Flower, Fupper), then any F (λj, λj+1) is not stabilising.

6. |

¯ |

|

If (λj, λj+1) (Flower, Fupper), then choose F (λj, λj+1) and check whether PF¯ (s) = |

||

|

P (s) + F Q(s) is Hurwitz (use, for example, the Routh/Hurwitz criterion). |

|

7. |

If PF¯ is Hurwitz, then PF is Hurwitz for any F (λj, λj+1). |

|

|

Let us try this out on a simple example. |

|

416 |

10 Stabilisation and state estimation |

22/10/2004 |

|||||

10.25 Example ((10.19) cont’d) We resume our example where |

|||||||

|

A = −1 |

0 , |

b = 1 , |

c = 4 , |

D = 01. |

||

|

0 |

1 |

|

|

0 |

3 |

|

We compute |

|

|

|

|

|

|

|

|

P (s) = s2 + 1, Q(s) = 4s + 3, |

||||||

which gives |

|

|

|

|

Fupper = ∞. |

|

|

|

|

Flower = 0, |

|

||||

One may also determine that |

|

0 1 |

, H(Q) = 0 3 . |

||||

|

H(P ) = |

||||||

|

|

|

0 |

1 |

|

4 |

0 |

We then compute

det(H(P ) + λH(Q)) = 12s2 + 4s.

Thus

σ(H(P ), −H(Q)) ∩ R = −13 , 0 ,

so λ1 = −13 and λ2 = 0. We note that (λ1, λ2) 6 (Flower, Fupper), so from Theorem 10.23 we may only conclude that there are no stabilising output feedback constants in (λ1, λ2). However, note that in this example, any F (Flower, Fupper) is actually stabilising. Thus while Theorem 10.23 provides a way to perhaps obtain some stabilising output feedback constants, it does not provide all of them. This further points out the genuine di culty of developing a satisfactory theory for stabilisation using static output feedback, even in the SISO context.

10.26 Remark While the above discussion suggests that obtaining a fully-developed theory for stabilisation by static output feedback may be troublesome, in practice, things are not as grim as they have been painted out to be, at least for SISO systems. Indeed, the matter of finding stabilising output feedback constants is exactly the problem of finding constants F so that the polynomial

PF (s) = P (s) + F Q(s)

is Hurwitz. A standard way to do this is using root-locus methods developed by Evans [1948, 1950], and presented here in Chapter 11. It is also possible to use the Nyquist criterion to obtain suitable values for F . Note, however, that both of these solution methods, while certainly usable in practice, are graphical, and do not involve concrete formulas, as do the corresponding formulas for static state feedback in Section 10.2.1 and for dynamic output feedback in Section 10.2.3. Thus one’s belief in such solutions methods is exactly as deep as

one’s trust in graphical methods. |

|

10.2.3 Stabilising dynamic output feedback controllers |

In this section we will show |

that it is always possible to construct a dynamic output feedback controller that renders the resulting closed-loop system internally asymptotically stable, provided that the plant is stabilisable and detectable. This is clearly interesting. First of all, we should certainly expect that we will have to make the assumption of stabilisability and detectability. If these assumptions are not made, then it is not hard to believe that there will be no way to make the

22/10/2004 10.2 Methods for constructing stabilising control laws 417

plant internally asymptotically stable under feedback since the plant has internal unstable dynamics that are neither controlled by the input nor observed by the output.

First we recall that if ΣP = (AP , bP , ctP , DP ) is stabilisable and detectable there exists two vectors f, ` Rn with the property that the matrices AP − bP ft and AP − `ctP are Hurwitz. With this as basis, we state the following result.

10.27 Theorem Let ΣP = (AP , bP , ctP , DP ) be a SISO linear plant. Then the following statements are equivalent:

(i)ΣP is stabilisable and detectable;

(ii)there exists a SISO linear controller ΣC = (AC, bC, ctC, DC) with the property that the closed-loop system is internally asymptotically stable.

Furthermore, if either of these equivalent conditions is satisfied and if f, ` Rn have the property that the matrices AP − bP ft and AP − `ctP are Hurwitz, then the SISO linear controller ΣC defined by

AC = AP − `ctP − bP ft + DP `ft,

b |

C |

= `, |

ct |

= ft, |

D = 0 |

|

|

C |

|

1 |

has the property that the closed-loop system is internally asymptotically stable.

Proof (i) = (ii) Using Proposition 6.56 we compute the closed-loop system matrix Acl to

be |

AP |

|

|

|

bP ft |

|

|

|

|

|

|

|

|

|

|

|

|||

Acl = −`cPt |

|

AP − `cPt − bP ft . |

|

||||||

Now define T R2n×2n by |

|

|

|

|

|

|

|

|

|

T = |

In In |

= |

|

T −1 |

= |

In −In . |

|||

|

0n In |

|

|

|

|

0n In |

|

||

One readily computes that |

|

|

|

|

|

|

|

|

|

T AclT −1 = |

|

AP − `ct Pt |

|

0n |

t . |

|

|||

|

|

|

−`cP |

A − bf |

|

|

|||

In particular,

spec(Acl) = spec(AP − `ctP ) spec(A − bft),

which says that the eigenvalues of Acl are in C−, as desired, provided we choose f and ` appropriately. This also proves the second assertion of the theorem.

(ii) = (i) If ΣP is neither stabilisable nor detectable, it is also neither controllable nor observable. Therefore, by Theorem 2.41 we may suppose that AP , bP , and cP have the form

AP = |

0k×j |

A22 |

0j×` |

A24 , |

bP = |

b2 |

, |

cP = |

c2 , |

|||||||||

|

|

A11 |

A12 |

A13 |

A14 |

|

|

|

b1 |

|

|

|

|

0j |

|

|||

|

0 |

|

`×j |

0 `×k |

0 33 |

A34 |

|

0 |

` |

|

|

c` |

||||||

|

|

|

m×j m×k m×` |

44 |

|

|

|

|

m |

|

|

|

4 |

|

||||

|

|

0 |

|

0 |

A |

A |

|

|

|

0 |

|

|

|

|

0 |

|

||

|

|

|

|

|

|

|

|

|

|

|

|

|

||||||

for suitable j, k, `, and m. The assumption that ΣP is neither stabilisable nor detectable is equivalent to saying that the matrix A33 is not Hurwitz. First let us suppose that A33 has

418 |

|

|

10 Stabilisation and state estimation |

22/10/2004 |

|||

a real eigenvaluet |

λ |

|

+ with v an eigenvector. Consider a controller SISO linear system |

||||

C |

|||||||

ΣC = (AC, bC, cC |

, DC), giving rise to the closed-loop equations |

|

|||||

|

|

|

x˙ P (t) = AP xP (t) + bP u(t) |

|

|||

|

|

|

x˙ C(t) = ACxC(t) − bCy(t) |

|

|||

|

|

|

y(t) = cPt xP (t) + DP u(t) |

|

|||

|

|

|

u(t) = cCt xC(t) − DCy(t). |

|

|||

If we choose initial conditions for xP and xC as |

|

|

|||||

|

|

|

xP (0) = |

0k |

, |

xC(0) = 0, |

|

|

|

|

|

0j |

|

|

|

|

|

|

0v |

|

|

|

|

|

|

|

|

m |

|

|

|

|

|

|

|

|

|

|

|

the resulting solution to the closed-loop equations will simply be |

|

||||||

|

|

|

xP (t) = eλt |

0k , |

xC(t) = 0. |

|

|

|

|

|

|

0j |

|

|

|

|

|

|

|

0v |

|

|

|

|

|

|

|

m |

|

|

|

|

|

|

|

|

|

|

|

In particular, the closed-loop system is not internally asymptotically stable. If the eigenvalue

in C+ is not real, obviously a similar argument can be constructed.

10.28 Remark The stabilising controller ΣC constructed in the theorem has the same order, i.e., the same number of state variables as the plant ΣP . It can be expected that frequently one can do much better than this and design a significantly “simpler” controller. In Section 10.3 we parameterise (almost) all stabilising controllers which includes the one of Theorem 10.27 as a special case.

This gives the following interesting corollary that relates to the feedback interconnection of Figure 10.2. Note that this answer the question raised at the end of Section 6.3.1.

rˆ(s) |

RC (s) |

RP (s) |

yˆ(s) |

|

− |

|

|

Figure 10.2 Unity gain feedback loop

10.29 Corollary Let RP R(s) which we assume to be represented by its c.f.r. (NP , DP ). Then it is possible to compute a controller rational function RC with the property that the closed-loop interconnection of Figure 10.2 is IBIBO stable.

Proof This follows immediately from Theorem 10.27 since the canonical minimal realisation of RP is controllable and observable, and so stabilisable and detectable.

22/10/2004 |

10.2 Methods for constructing stabilising control laws |

419 |

Let us see how this works in an example. We return to the example of Section 6.4.3, except now we do so with a methodology in mind.

10.30 Example (Example 6.59) In this example we had

0 |

0 |

m |

|

0 |

|

AP = 0 |

1 , |

bP = 01 |

, |

cP = 1 , DP = 01. |

|

|

|

|

|

|

|

To apply Theorem 10.27 we need to design vectors f and ` so that AP −bP ft and AP −`ctP are Hurwitz. To do this, it is required that (AP , bP ) be stabilisable and that (AP , cP ) be detectable. But we compute

C(A, b) = |

1 |

0 |

, |

O(A, c) = |

0 |

1 . |

|

0 |

1 |

|

|

1 |

0 |

Thus ΣP is controllable (and hence stabilisable) and observable (and hence detectable). Thus we may use Proposition 10.13 to construct f and Corollary 10.17 to construct `. In each case, we ask that the characteristic polynomial of the closed-loop matrix be α(s) = s2 + 2s2 which has roots −1 ± i. Thus we define

ft = |

0 · · · |

0 1 |

C(A, b)−1α(A) = |

2m |

2m |

|||||||||

` |

= |

0 |

|

|

|

O(A |

, c) |

−1 |

α(A |

) |

|

2 |

|

|

t |

|

|

· · · |

0 1 |

|

t |

|

t |

|

= |

2 . |

|||

|

|

|

|

|

|

|

|

|

|

|

|

|||

Here, following Proposition 10.13, we have used

α(A) = A2 + 2A + 2I2, α(At) = (At)2 + 2At + 2I2.

Using Theorem 10.27 we then ascertain that

|

|

− |

P − |

|

|

−4 |

−2 |

AC = AP |

|

`ct |

bP ft = −2 |

1 |

|||

bC = ` = |

2 |

, cC |

= f |

|

= 2m 2m . |

||

|

|

2 |

t |

|

t |

|

|

Let us check that the closed-loop system, as defined by Proposition 6.56, is indeed internally asymptotically stable. A straightforward application of Proposition 6.56 gives

|

|

|

|

|

|

|

|

|

|

|

|

|

|

0 |

1 |

0 |

0 |

|

|

|

|

|

|

0 |

0 |

2 |

2 |

|

|

|

Acl = |

−2 |

0 |

−4 |

|

2 |

, |

||

|

2 |

0 |

2 |

1 |

|||||

|

|

|

|

|

|

|

|

|

|

bcl = |

0 |

, |

|

− |

|

− |

− |

Dcl = 01. |

|

|

ccl = 1 0 0 0 , |

||||||||

|

0 |

|

t |

|

|

|

|

|

|

|

2 |

|

|

|

|

|

|

||

|

|

|

|

|

|

|

|

|

|

2

One may check that the eigenvalues of the Acl are {−1 ± i} where each root is has algebraic

multiplicity 2. As predicted by the proof of Theorem 10.27, these are the eigenvalues of

AP − bP ft and AP − `ctP .

Now let us look at this from the point of view of Corollary 10.29. Instead of thinking of the plant as a SISO linear system ΣP , let us think of it as a rational function RP (s) =

420 |

10 Stabilisation and state estimation |

22/10/2004 |

TΣP (s) = ms1 2 . This is not, of course, a BIBO stable transfer function. However, if we use the controller rational function

4m(2s + 1) s2 + 4s + 8 ,

then we are guaranteed by Corollary 10.29 that the closed-loop configuration of Figure 10.2 is IBIBO stable. In Figure 10.3 is shown the Nyquist plot for RL = RCRP . Note that the

|

4 |

|

|

|

|

|

2 |

|

|

|

|

Im |

0 |

|

|

|

|

|

-2 |

|

|

|

|

|

-4 |

|

|

|

|

|

-4 |

-2 |

0 |

2 |

4 |

|

|

|

Re |

|

|

Figure 10.3 Nyquist plot for dynamic output feedback problem

Nyquist criterion is indeed satisfied. However, one could certainly make the point that the gain and phase margins could use improvement. This points out the general drawback of the purely algorithmic approach to controller design that is common to all of the algorithms we present. One should not rely on the algorithm to produce a satisfactory controller out of the box. The control designer will always be able to improve an initial design by employing the lessons only experience can teach.

10.3 Parameterisation of stabilising dynamic output feedback controllers

The above discussion of construction stabilising controllers leads one to a consideration of whether it is possible to describe all stabilising controllers. The answer is that it is, and it is best executed in the rational function framework. We look at the block diagram configuration of Figure 10.4. We think of the plant transfer function RP as being proper and fixed. The objective is to find all controller transfer functions RC so that the interconnection is IBIBO stable. This will happen when the transfer functions between all inputs and outputs

are in RH∞+ . The four relevant transfer functions are |

|

|

|||

T1 = |

1 |

, T2 = |

RC |

, |

|

1 + RCRP |

1 + RCRP |

||||

|

RP |

|

RCRP |

(10.10) |

|

T3 = |

, T4 = |

. |

|||

1 + RCRP |

1 + RCRP |

||||

|

|

|

|||

Let us first provide a useful properties rational functions in RH+∞.

22/10/2004 |

10.3 Parameterisation of stabilising dynamic output feedback controllers |

421 |

rˆ(s) |

RC (s) |

RP (s) |

yˆ(s) |

|

− |

|

|

Figure 10.4 The block diagram configuration for the investigation of stabilising controller parameterisation

10.3.1 More facts about RH+ |

It is useful to investigate in more detail some algebraic |

|

+ |

∞ |

|

properties of RH∞. These will be useful in this section, and again in Chapter 15. Many of our results in this section may be found in [Fuhrmann 1996].

For a rational function R R(s), the c.f.r. is a representation of R by the quotient of coprime polynomials where the denominator polynomial is monic. Let us look at another way to represent rational functions. We shall say that R1, R2 RH+∞ are coprime if they have no common zeros in C+ and if at least one of them is not strictly proper.

10.31 Definition A coprime fractional representative of R R(s) is a pair (RN , RD) with the following properties:

(i)RN , RD RH+∞;

(ii)RD and RN are coprime in RH+∞;

(iii) R = |

RN |

|

RD . |

The following simple result indicates that any rational function has a coprime fractional representative.

10.32 Proposition If R R(s) then R has a coprime fractional representative.

Proof Let (N, D) be the c.f.r. of R and let k = max{deg(D), deg(N)}. Then (RN , RD) is a coprime fractional representative where

|

N(s) |

|

D(s) |

|

|

RN (s) = |

|

, RD(s) = |

|

. |

|

(s + 1)k |

(s + 1)k |

||||

Note that unlike the c.f.r., there is no unique coprime fractional representative. However, it will be useful for us to come up with a particular coprime fractional representative. Given a polynomial P R[s] we may factor it as P (s) = P−(s)P+(s) where all roots of P− lie in C− and all roots of P+ lie on C+. This factorisation is unique except in a trivial way; the coe cient of the highest power of s may be distributed between P− and P+ in an arbitrary

way. Now let (N, D) be the c.f.r. for R R(s). We then have |

|

|

|

|

|

|

|||||||

|

N(s) |

|

N−(s)N+(s) |

|

N−(s)(s + 1)`+k |

|

N+(s) |

|

|

|

|||

R(s) = |

D(s) |

= |

D(s) |

|

= |

|

D(s) |

|

(s + 1)`+k |

|

(10.11) |

||

|

|

|

|

|

|

|

|

|

|

N |

(s)(s+1)`+k |

+ |

|

where ` = deg(N+) and k is the relative degree of (N, D). Note that |

− |

|

D(s) |

RH∞ |

|||||||||

and that N−(s)(s+1)`+k RH+∞. Generally, if Q RH+∞ and Q−1 RH+∞, then we say that Q is invertible in RH+∞. The formula (10.11) then says that any rational function R RH+∞

422 |

10 Stabilisation and state estimation |

22/10/2004 |

is the product of a function invertible in RH+∞, and a function in RH+∞ all of whose zeros lie

in C+.

The following result introduces the notion of the “coprime factorisation.” This will play for us an essential rˆole in determining useful representations for stabilising controllers.

|

Theorem Rational functions |

|

+ |

|

|

10.33 |

R1, R2 |

RH∞ are coprime if and only if there exists |

|||

+ |

|||||

ρ1, ρ2 RH∞ so that |

ρ1R1 |

+ ρ2R2 = 1. |

(10.12 ) |

||

|

|

||||

We call (ρ1, ρ2) a coprime factorisation of (R1, R2).

Proof First suppose that ρ1, ρ2 RH+∞ exist as stated. Clearly, if z C+ is a zero of, say, R1 is cannot also be a zero of R2 as this would contradict (10.12). What’s more, if both R1 and R2 are strictly proper then we have

s→∞ |

1 |

1 |

2 |

2 |

(s) |

|

lim |

ρ |

(s)R |

(s) + ρ |

(s)R |

= 0, |

again in contradiction with (10.12).

Now suppose that R1, R2 RH+∞ are coprime and suppose that R1 is not strictly proper. Let (N1, D1) and (N2, D2) be the c.f.r.’s for R1 and R2. Denote σ = (s+1), let `j = deg(Nj,+), and let kj be the relative degree of (Nj, Dj), j = 1, 2. Thus k1 = 0. Write

|

|

|

|

|

|

|

|

˜ |

|

Nj,+ |

|

|

|

||||

|

|

|

|

|

Rj = Rj |

σ`j+kj |

, j = 1, 2, |

|

|||||||||

|

˜ |

|

|

|

|

|

|

+ |

|

. Suppose that (˜ρ1, ρ˜2) are a coprime factorisation |

|||||||

where Rj, j = 1, 2, is invertible in RH |

∞ |

||||||||||||||||

|

N1,+ |

|

N2,+ |

|

|

|

|

|

|

|

|

|

|

|

|||

of ( |

|

, |

|

). Then |

|

|

|

|

|

|

|

|

|

|

|

|

|

σ`1+k1 |

σ`2+k2 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|||

|

|

|

|

|

ρ˜1 |

N1,+ |

+ ρ˜2 |

N2,+ |

= 1 |

|

|

||||||

|

|

|

|

|

σ`1 |

σ`2+k2 |

|

|

|||||||||

|

|

|

|

= |

|

˜−1 ˜ |

N1,+ |

|

˜−1 ˜ |

N2,+ |

|

||||||

|

|

|

|

ρ˜1R1 R1 |

σ`1 |

+ ρ˜2R2 R2 |

σ`2+k2 |

= 1. |

|||||||||

˜ ˜ + ˜−1 ˜−1

Since R1 and R2 are invertible in RH∞ this shows that (˜ρ1R1 , ρ˜2R2 ) is a coprime factorisation of (R1, R2). Thus we can assume, without loss of generality, that R1 and R2 are of

the form |

P1 |

|

|

P2 |

|

|

R1 = |

, R2 |

= |

, |

|||

σ`1 |

σ`2+k |

where the coprime polynomials P1 and P2 have all roots in C+, deg(Pj) = `j, j = 1, 2, and `2 ≤ `1. By Lemma C.4 we may find polynomials Q1, Q2 R[s] so that

|

|

|

|

|

Q1P1 + Q2P2 = σ`1+`2+k, |

|

|||||||||||||||||

and with deg(Q2) < deg(P1). Thus we have |

|

|

|

|

|

|

|

|

|

|

|

|

|||||||||||

|

|

|

|

|

Q1 P1 |

+ |

|

Q2 P2 |

= 1. |

|

(10.13) |

||||||||||||

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|||||

|

|

|

|

|

|

|

|

|

|

|

|

σ`1 σ`2+k |

|||||||||||

|

|

|

|

|

σ`2+k σ`1 |

|

|

|

|

|

|||||||||||||

Since R2 RH∞+ , |

P2 |

is proper. Since deg(Q2) < deg(P1), |

Q2 |

is strictly proper. Therefore, |

|||||||||||||||||||

σ`2+k |

σ`1 |

||||||||||||||||||||||

the second term in (10.13) is strictly proper. Since |

|

P1 |

is not strictly proper, it then follows |

||||||||||||||||||||

|

` |

||||||||||||||||||||||

|

Q1 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

σ 1 |

|

|

|

|

||

that |

is also not strictly proper since we must have |

|

|||||||||||||||||||||

σ`2+k |

|

||||||||||||||||||||||

|

|

|

|

s→∞ |

Q1 |

P1 |

+ |

Q2 |

|

P2 |

|

|

|

||||||||||

|

|

|

|

σ`2+k |

|

σ`1 |

σ`1 |

|

σ`2+k |

|

|||||||||||||

|

|

|

|

lim |

|

|

|

|

|

|

|

|

|

|

|

|

|

= 1 |

|

||||

22/10/2004 |

10.3 Parameterisation of stabilising dynamic output feedback controllers |

423 |

||||||||

and |

s→∞ σ`1 |

σ`2+k |

|

|

|

|||||

|

|

|

||||||||

|

lim |

|

Q2 |

|

P2 |

= 0. |

|

|

||

|

|

|

|

|

|

|

|

|||

Therefore, if we take |

Q1 |

|

|

|

Q2 |

|

|

|||

|

ρ1 = |

|

, ρ2 = |

, |

|

|||||

|

σ`2+k |

|

σ`1 |

|

||||||

we see that the conditions of the theorem are satisfied. |

|

|

||||||||

The matter of determining rational functions ρ1 and ρ2 in the lemma is not necessarily straightforward. However, in the next section we shall demonstrate a way to do this, at least if one can find a single stabilising controller. The following corollary, derived directly from the computations of the proof of Theorem 10.33, declares the existence of a particular coprime factorisation that will be helpful in the course of the proof of Theorem 10.37.

10.34 Corollary If R1, R2 RH+∞ are coprime with R1 not strictly proper, then there exists a coprime factorisation (ρ1, ρ2) of (R1, R2) having the property that ρ2 is strictly proper.

Proof As in the initial part of the proof of Theorem 10.33, let us write

|

|

˜ |

P1 |

|

˜ |

P2 |

|

|

|

R1 = R1 |

σ`1 |

, R2 |

= R2 |

σ`2+k |

, |

˜ |

˜ |

+ |

|

|

= deg(Pj), j = 1, 2. In the proof of |

||

where R1 |

and R2 |

are invertible in RH∞ and where `j |

|||||

Theorem 10.33 were constructed ρ˜1 and ρ˜2 (in the proof of Theorem 10.33, these are the final ρ1 and ρ2) with the property that

ρ˜1 |

P1 |

+ ρ˜2 |

P2 |

= 1 |

σ`1 |

σ`2+k |

˜−1

and ρ2 is strictly proper. Now we note that if ρj = Rj ρ˜j, j = 1, 2, then (ρ1, ρ2) is a coprime

˜−1 |

+ |

factorisation of (R1, R2) and that ρ2 is strictly proper since R2 |

RH∞, and so is proper. |

10.3.2 The Youla parameterisation Now we can use Theorem 10.33 to ensure a means of parameterising stabilising controllers by a single function in RH+∞. Before we begin, let us establish some notation that we will use to provide an important preliminary results. We let RP R(s) be proper with coprime fractional representative (P1, P2), and let (ρ1, ρ2) be a coprime factorisation for (P1, P2). We call θ RH+∞ admissible for the coprime fractional representative (P1, P2) and the coprime factorisation (ρ1, ρ2) if

1. θ 6= ρ2 , and

P1

|

|

2. lim ρ2(s) − θ(s)P1(s) 6= 0.

s→∞

Now we define

Spr(P1, P2, ρ1, ρ2) = |

ρ2 |

|

θP1 |

|

θ admissible . |

|

|

|

ρ1 |

+ θP2 |

|

|

|

|

|

|

− |

|

|

|

|

|

|

|

|

|

|

At this point, this set depends on the choice of coprime factorisation (ρ1, ρ2). The following lemma indicates that the set is, in fact, independent of this factorisation.

424 |

10 |

Stabilisation and state estimation |

22/10/2004 |

||||||||

10.35 Lemma Let RP |

R(s) be proper with coprime fractional representative |

(P1, P2). If |

|||||||||

(ρ1, ρ2) and (˜ρ1, ρ˜2) are coprime factorisations for (P1, P2), then the map |

|

||||||||||

|

|

|

ρ1 + θP2 |

|

|

˜ |

|

||||

|

|

|

7→ |

ρ˜1 + θ(θ)P2 |

|

|

|||||

|

|

ρ |

2 − |

θP |

1 |

|

˜ |

|

|||

|

|

|

|

|

|

|

ρ˜2 − θ(θ)P1 |

|

|||

from Spr(P1, P2, ρ1, ρ2) to Spr(P1, P2, ρ˜1, ρ˜2) is a bijection if |

|

||||||||||

|

|

|

˜ |

|

|

|

|

|

|

|

|

|

˜ |

|

θ(θ) = θ + ρ1ρ˜2 − ρ2ρ˜1. |

|

|||||||

|

+ |

|

|

|

|

|

|

|

|||

Proof First note that θ(θ) RH∞ so the map is well-defined. To see that the map is a |

|||||||||||

bijection, it su ces to check that the map |

|

|

|

|

|||||||

|

|

|

|

|

˜ |

|

|

|

˜ |

|

|

|

|

|

ρ˜1 + θP2 |

|

7→ |

ρ1 + θ(θ)P2 |

|

|

|||

|

|

ρ˜2 − |

˜ |

|

|

˜ |

|

||||

|

|

θP1 |

|

ρ2 − θ(θ)P1 |

|

||||||

is its inverse provided that |

|

|

˜ |

|

˜ |

|

|

|

|

||

|

|

|

|

|

|

|

|

|

|||

|

|

|

θ(θ) = θ + ρ˜1ρ2 − ρ˜2ρ1. |

|

|||||||

This is a straightforward, if slightly tedious, computation. |

|||||||||||

The upshot of the lemma is that the set Spr(P1, P2, ρ1, ρ2) is independent of ρ1 and ρ2. Thus let us denote it by Spr(P1, P2). Now let us verify that this set is in fact only dependent

on RP , and not on the coprime fractional representative of RP . |

|

||||||

|

|

|

|

|

|

˜ |

˜ |

10.36 Lemma If RP R(s) is proper, and if (P1, P2) and (P1 |

, P2) are coprime fractional |

||||||

|

|

˜ |

|

˜ |

|

|

|

representatives of RP , then Spr(P1, P2) = Spr(P1 |

, P2). |

|

|

||||

Proof Since we have |

|

|

|

˜ |

|

|

|

|

P1 |

|

|

|

|

||

RP = |

= |

P1 |

|

|

|||

P2 |

|

˜ |

|

|

|

||

|

|

P2 |

|

|

|||

˜ |

|

|

+ |

|

, ρ2) is a coprime factorisation of |

||

it follows that Pj = UPj for an invertible U |

RH∞. If (ρ1 |

||||||

−1 −1 ˜ ˜

(P1, P2) it then follows that (U ρ1, U ρ2) is a coprime factorisation of (P1, P2). We then

have |

|

|

|

θP˜ ˜1 |

|

θ admissible) |

|

||||

Spr(P˜1, P˜2) = ( ρ˜2 |

|

|

|

||||||||

|

|

|

|

˜ |

˜ |

|

|

|

|

|

|

|

ρ˜1 + θP2 |

|

|

|

|

|

|||||

|

|

− |

|

|

|

|

|

|

|

||

|

|

1 |

ρ1 + |

|

|

2 |

|

|

|

||

|

U− |

|

|

|

|

) |

|||||

( U−1ρ2 |

|

θU˜ P1 |

|

||||||||

|

|

|

|

|

|

˜ |

|

|

|

|

|

|

|

|

|

|

− |

θUP |

|

|

|

||

= |

|

|

|

|

|

|

|

|

|

θ˜ admissible |

|

|

ρ1 + θU˜ 2P2 |

|

|

|

|

|

|||||

= ( |

|

|

|

|

|

|

|

θ˜ admissible) |

|

||

ρ2 |

− |

θU˜ 2P1 |

|

|

|||||||

|

|

|

|

|

|||||||

|

|

|

|

|

|

|

|

|

|

|

|

|

ρ1 + θP2 |

|

|

|

|

|

|

||||

= |

|

|

|

|

|

θ admissible |

|

||||

ρ2 |

− |

θP1 |

|

||||||||

|

|

|

|

||||||||

= Spr(P1, P2), |

|

|

|

|

|

||||||

|

|

|

|

|

|

|

|

|

|

|

|

as desired.

Now we are permitted to denote Spr(P1, P2) simply by Spr(RP ) since it indeed only depends on the plant transfer function. With this notation we state the following famous result due to [Youla, Jabr, and Bongiorno 1976], stating that Spr(RP ) is exactly the set of proper stabilising controllers. Thus, in particular, Spr(RP ) S (RP ).

22/10/2004 |

10.3 Parameterisation of stabilising dynamic output feedback controllers |

425 |

10.37 Theorem Consider the block diagram configuration of Figure 10.4 and suppose that RP is proper. For the plant rational function RP , let (P1, P2) be a coprime fractional representative with (ρ1, ρ2) a coprime factorisation of (P1, P2):

ρ1P1 + ρ2P2 = 1. |

(10.14 ) |

Then there is a one-to-one correspondence between the set of proper rational functions RC R(s) that render the interconnection IBIBO stable and the set

|

ρ1 |

+ θP2 |

|

|

|

|

Spr(RP ) = ρ2 |

− |

θP1 |

θ admissible . |

(10.15 ) |

||

|

|

|||||

|

|

|

|

|

|

|

Furthermore, if RP is strictly proper, every θ RH+∞ is admissible.

Proof Let us first translate the conditions (10.10) into conditions on coprime fractional representatives for RP and RC. Let (P1, P2) be a coprime fractional representative of RP as in the statement of the theorem. Also denote a coprime fractional representative of RC as (C1, C2). Then the four transfer functions of (10.10) are computed to be

T1 = |

C2P2 |

, |

T2 |

= |

C1P2 |

, |

|

C1P1 + C2P2 |

C1P1 + C2P2 |

||||||

|

C2P1 |

|

|

|

C1P1 |

(10.16) |

|

T3 = |

, |

T4 |

= |

. |

|||

C1P1 + C2P2 |

C1P1 + C2P2 |

We are thus charged with showing that these four functions are in RH+∞.

Now let θ RH+∞ and let RC be the corresponding rational function defined by (10.15).

Let

C1 = ρ1 + θP2, C2 = ρ2 − θP1.

We claim that (C1, C2) is a coprime fractional representative of RC. This requires us to show that C1, C2 RH+∞, that the functions have no common zeros in C+, and that at least one of them is not strictly proper. That C1, C2 RH+∞ follows since θ, ρ1, ρ2, P1, P2 RH+∞. A direct computation, using (10.14), shows that

C1P1 + C2P2 = 1. |

(10.17) |

From this it follows that C1 and C2 have no common zeros. Finally, we shall show that RC is proper. By Lemma 10.35 we may freely choose the coprime factorisation (ρ1, ρ2). By Corollary 10.34 we choose (ρ1, ρ2) so that ρ1 is strictly proper. Since

s→∞ ρ1(s)P1(s) + ρ2(s)P2(s) |

= 1, |

lim |

|

it follows that ρ2 is not strictly proper. Therefore, C2 = ρ2 − θP1 is also not strictly proper, provided that θ is admissible.

Now consider the case when RP is proper (i.e., the final assertion of the theorem). Note that if RP is strictly proper, then so is P1. Condition 1 of the definition of admissibility

then follows since if θ = ρ2 , then θ would necessarily be improper. Similarly, if P1 is strictly

P1

proper, it follows that lims→∞ C2(s) = ρ2(s) 6= 0. Thus condition 2 for admissibility holds. Now suppose that RC R(s) stabilises the closed-loop system so that the four transfer functions (10.10) are in RH+∞. Let (C1, C2) be a coprime fractional representative of RC.

426 |

|

|

10 Stabilisation and state estimation |

|

|

22/10/2004 |

|||

P |

+ C P |

. |

We claim that D and |

1 |

are in RH+ |

. Clearly D |

|

RH+ |

. Also, if |

Let D = C1+ 1 |

2 2 |

|

|

D |

∞ |

|

∞ |

|

|

α1, α2 RH∞ have the property that |

|

|

|

|

|

|

|||

α1C1 + α2C2 = 1,

(such functions exist by Theorem 10.33), then we have

=(α1C1 + α2C2)(ρ1P1 + ρ2P2) D D

=α1ρ1T4 + α1ρ2T2 + α2ρ1T3 + α2ρ2T1.1

By the assumption that the transfer functions T1, T2, T3, and T4 are all in RH+∞, it follows that D1 RH+∞. Thus D is proper and not strictly proper so that we may define a new coprime fractional representative for RC by (CD1 , CD2 ) so that

|

C1P1 + C2P2 = 1. |

|

|||

We therefore have |

C1 |

C2 P2 |

= 1 |

||

|

|||||

|

ρ1 |

ρ2 |

P1 |

1 |

1 |

|

P2 |

|

C1 |

−ρ1 |

|

= |

θ P1 = −C2 |

ρ2 |

1 , |

||

if we take θ = ρ2C1 − ρ1C2 RH∞+ . It therefore follows that |

|

C1 = ρ1 + θP2, C2 = ρ2 − θP1, |

|

as desired. |

|

10.38 Remarks 1. This is clearly an interesting result as it allows us the opportunity to write down all proper stabilising controllers in terms of a single parameter θ RH+∞. Note that there is a correspondence between proper controllers and those obtained in the dynamic output feedback setting. Thus the previous result might be thought of as capturing all the stabilising dynamics output feedback controllers.

2.Some authors say that all stabilising controllers are obtained by the Youla parameterisation. This is not quite correct.

3.In the case that RP is not strictly proper, one should check that all admissible θ’s give

loop gains RL = RCRP that are well-posed in the unity gain feedback configuration of Figure 10.4. This is done in Exercise E10.15.

Things are problematic at the moment because we are required to determine ρ1, ρ2 RH+∞ with the property that (10.14) holds. This may not be trivial. However, let us indicate a possible method for determining ρ1 and ρ2. First let us show that the parameter θ RH+∞ uniquely determines the controller.

10.39 Proposition Let RP be a strictly proper rational function with (P1, P2) a coprime fractional representative. Let ρ1, ρ2 RH+∞ have the property that

ρ1P1 + ρ2P2 = 1.

22/10/2004 |

10.3 Parameterisation of stabilising dynamic output feedback controllers |

427 |

|||

Then the map |

|

ρ1 |

+ θP2 |

|

|

|

θ 7→ |

|

|||

|

ρ2 |

− θP1 |

|

||

from RH+∞ into the set of stabilising controllers for the block diagram configuration of Figure 10.4 is injective.

Proof Let Φ be the indicated map from RH+∞ into the set of stabilising controllers, and suppose that Φ(θ1) = Φ(θ2). That is,

|

ρ1 + θ1P2 |

= |

ρ1 + θ2P2 |

. |

|

|

|

||

ρ2 − θ1P1 |

ρ2 − θ2P1 |

|||

This implies that |

|

|

||

− θ1θ2P1P2 + θ1P2ρ2 − θ2P1ρ1 + ρ1ρ2 = −θ1θ2P1P2 − θ1P1ρ1 + θ2P2ρ2 + ρ1ρ2 |

||||

= θ1(ρ1P1 + ρ2P2) = θ2(ρ1P1 + ρ2P2) |

|

|

||

= θ1 = θ2, |

|

|

||

as desired. |

|

|

||

Let us now see how to employ a given stabilising controller to determine ρ1 and ρ2.

10.40 Proposition Let RP be a strictly proper rational function with (P1, P2) a coprime fractional representative. If RC is a stabilising controller for the block diagram configuration of

Figure 10.4, define ρ1 and ρ2 by |

|

|

|

check |

|

|

RC |

|

1 |

|

|

ρ1 = |

|

, ρ2 |

= |

|

. |

P2 + P1RC |

P2 + P1RC |

||||

Then the following statements hold:

(i)ρ1, ρ2 RH+∞;

(ii)ρ1P1 + ρ2P2 = 1.

Proof (i) Let (C1, C2) be a coprime fractional representative for RC. Then

|

|

|

C1 |

|

|

|

|

|

||||

ρ1 |

= |

|

C2 |

|

|

|

|

|

||||

|

|

C1 |

|

|

|

|

|

|

|

|

||

|

P2 |

+ P1 |

|

|

|

|

|

|

|

|||

|

C2 |

|

|

|

|

|

||||||

|

|

|

|

|

|

|

|

|

||||

|

|

|

C1 |

|

|

|

|

|

||||

|

= |

|

. |

|

|

|

|

|

||||

|

C1P1 + C2P2 |

|

|

|

|

|

||||||

As in the proof of Theorem 10.37, it follows then that if D = C1P1 +C2P2 then D, |

1 |

|

|

RH+ . |

||||||||

D |

||||||||||||

+ |

+ |

|

|

|

|

|

+ |

∞ |

||||

Since C1 RH∞, it follows that ρ1 RH∞. A similar computation gives ρ2 |

RH∞. |

|

||||||||||

(ii) This is a direct computation. |

|

|

|

|

|

|

|

|

|

|

|

|

This result allows us to compute ρ1 and ρ2 given a coprime fractional representative for a plant transfer function. This allows us to produce the following algorithm for parameterising the set of stabilising controllers.

10.41 Algorithm for parameterisation of stabilising controllers Given a proper plant transfer function RP perform the following steps.

1. Determine a coprime fractional representative (P1, P2) for RP using Proposition 10.32.

428 |

10 Stabilisation and state estimation |

22/10/2004 |

2. Construct the canonical minimal realisation ΣP = (AP , bP , ctP , DP ) for RP .

3. Using Proposition 10.13, construct f Rn so that AP − bP ft is Hurwitz.

4.Using Corollary 10.17 construct ` Rn so that AP − `ctP is Hurwitz. Note that this amounts to performing the construction of Proposition 10.13 with A = AtP and b = c.

5. Using Theorem 10.27 define a stabilising controller SISO linear system ΣC =

(AC, bC, ctC, DC).

6.Define the stabilising controller rational function RC = TΣC .

7.Determine ρ1, ρ2 RH+∞ using Proposition 10.40.

8.The set of all stabilising controllers is now given by

|

ρ1 |

+ |

θP2 |

|

|

|

Spr(RP ) = ρ2 |

− |

θP1 |

θ admissible . |

|

||

|

|

|||||

Let us carry this out for an example.

10.42 Example (Example 6.59 cont’d) We return to the example where RP = ms1 2 , and perform the above steps.

1. A coprime fractional representative of RP is given by (P1, P2) where

|

|

|

1/m |

|

|

s2 |

|||

|

P1(s) = |

|

|

, P2 |

(s) = |

|

. |

||

|

(s + 1)2 |

(s + 1)2 |

|||||||

2. |

As in Example 6.59, we have |

m |

|

|

0 |

||||

|

0 |

0 |

|

||||||

|

AP = 0 |

1 , |

bP = 01 |

, |

cP = 1 , DP = 01. |

||||

3. |

In Example 10.30 we computed |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

2m |

|

|

|

|

|

|

f = 2m , |

|

|

||||

4. In Example 10.30 we also computed

2 ` = 2 .

5. Using Example 10.30 we have

AC = |

−4 |

|

2 bC = |

2 |

, cC = 2m 2m |

, DC = 01. |

||

|

2 |

1 |

2 |

t |

|

|

|

|

|

− |

− |

|

|

|

|

||

6. In Example 10.30 we computed

4m(2s + 1) RC(s) = s2 + 4s + 8 .

7. From Proposition 10.40 we calculate, after some simplification,

|

|

(s + 1)2 |

(s2 |

+ 4s + 8) |

|

4m(s + 1)2(2s + 1) |

|

|||

ρ1 |

= |

|

|

|

, ρ2 |

= |

|

|

. |

|

(s2 + 2s + 2)2 |

(s2 |

+ 2s + 2)2 |

||||||||

|

|

|

|

|

||||||

22/10/2004 |

|

10.4 Strongly stabilising controllers |

|

|

429 |

|||||

8. Finally, after simplification, we see that the set of stabilising controllers is given by |

|

|||||||||

Spr(RP ) = ( |

m(s + 1)4(s2 + 4s + 8) |

|

θ(s)((s2 |

+ 2s + 2)2) |

|

θ RH∞+ ) . |

|

|||

|

|

|

|

|

|

|

|

|

|

|

|

m 4m(s + 1)4(2s + 1) + θ(s)s2((s2 |

+ 2s + 2)2) |

|

|

||||||

|

|

|

|

− |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

This is a somewhat complicated expression. It can be simplified |

by using simpler ex- |

|||||||||

|

|

|

||||||||

pressions for ρ1 and ρ2. In computing ρ1 and ρ2 above, we have merely applied our rule |

||||||||||

verbatim. Indeed, simpler functions that also satisfy ρ1P1 + ρ2P2 = 1 are |

|

finish |

||||||||

|

|

ρ1(s) = 1, ρ2(s) = m. |

|

|

|

|

||||

With these functions we compute the set of stabilising controllers to be |

|

|

||||||||

|

( θ + m(s2 + 1) |

|

θ RH∞) , |

|

|

|

||||

|

m θ(s)s2 − m(s2 + 1) |

|

|

+ |

|

|

|

|||

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

which is somewhat more pleasing than our |

expression derived using our rules. |

|||||||||

|

|

|

|

|

|

|

||||

10.4 Strongly stabilising controllers

In the previous section we expressed all controllers that stabilise a given plant. Of course, one will typically not want to allow any form for the controller. For example, one might wish for the controller transfer function to itself be stable. This is not always possible, and in this section we explore this matter.

10.5 State estimation

One of the problems with using static or dynamic state feedback is that the assumption that one knows the value of the state is typically over-optimistic. Indeed, in practice, it is often the case that one can at best only know the value of the output at any given time. Therefore, what one would like to be able to do is infer the value of the state from the knowledge of the output. A moments reflection should suggest that this is possible for observable systems. A further moments reflection should lead one to allow the possibility for detectable systems. These speculations are correct, and lead to the theory of observers that we introduce in this section.

10.5.1 Observers Let us give a rough idea of what we mean by an observer before we get to formal definitions. Suppose that we have a SISO linear system Σ = (A, b, ct, D) evolving with its usual di erential equations:

x˙ = Ax(t) + bu(t)

(10.18)

y(t) = ctx(t) + Du(t).

An observer should take as input the original input u(t), along with the measured output y(t). Using these inputs, the observer constructs an estimate for the state, and we denote this estimate by xˆ(t). In Figure 10.5 we schematically show how an observer works. Our first result shows that an observer exists, although we will not use the given observer in practice.

430 |

10 Stabilisation and state estimation |

22/10/2004 |

x(t)

u(t)  plant

plant

e(t)

e(t)

−

y(t)

xˆ (t)

observer

observer

Figure 10.5 A schematic for an observer using the error

10.43 Proposition Let Σ = (A, b, ct, D) be |

an observable SISO linear system satisfy- |

||||

ing (10.18). There exists oo(s), oi(s) R[s]n×1 |

with the property that |

||||

x(t) = oo |

d |

y(t) + oi |

d |

u(t). |

|

dt |

dt |

||||

Proof In (10.18), di erentiate y(t) n − 1 times successively with respect to t, and use the equation for x˙ (t) to get

|

y(t) |

|

|

|

ct |

|

|

|

|

cDtb |

0 |

|

|

· · · |

0 |

0 |

|

u(t) |

|

||||

y(1)(t) |

|

ctA |

|

D |

|

0 |

0 |

u(1)(t) |

|||||||||||||||

|

(2) |

|

|

|

t |

|

2 |

|

|

|

t |

|

|

t |

|

|

· · · |

0 |

0 |

|

|

(2) |

|

y |

.(t) |

|

= |

c A. |

|

x(t) + |

|

c Ab. |

c.b |

|

·.· · |

|

u |

.(t) |

|

||||||||

|

|

. |

. |

||||||||||||||||||||

.. |

|

|

.. |

|

|

|

.. |

|

.. |

|

|

.. |

.. |

.. |

|

|

.. |

|

|||||

|

|

|

|

|

|

n |

− |

1 |

|

|

|

n |

2 |

n |

3 |

b |

|

|

|

|

|

|

|

y(n−1)(t) |

|

ctA |

|

|

ctA |

− |

b ctA |

− |

· · · |

ctb D u(n−1)(t). |

|||||||||||||

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

Since Σ is observable, O(A, c) is invertible, and so we have |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

||||||||||||||||||

|

|

|

|

|

y(t) |

|

|

|

|

|

cDtb |

|

|

|

0 |

|

|

|

· · · |

|

0 |

0 |

|

u(t) |

|

|

||||||

|

|

|

y(1)(t) |

|

|

|

|

|

|

D |

|

|

|

0 |

0 |

u(1)(t) |

||||||||||||||||

|

|

1 |

y |

(2) |

|

|

1 |

|

|

t |

|

|

|

|

|

t |

|

|

|

· · · |

|

0 |

0 |

|

|

(2) |

|

|

||||

x(t) = O(A, c)− |

|

.(t) |

− O(A, c)− |

|

c Ab. |

|

|

c.b ·.· · |

|

u |

|

.(t) |

||||||||||||||||||||

|

|

|

|

|

|

|

. |

. |

|

|||||||||||||||||||||||

|

|

|

|

|

|

.. |

|

|

|

.. |

|

|

|

|

.. |

|

|

|

.. |

|

.. |

.. |

.. |

|

|

|||||||

|

|

|

|

|

|

|

|

|

|

|

|

|

n |

2 |

|

|

n |

3 |

b |

|

|

|

|

|

|

|

|

|

|

|||

|

|

|

y(n−1)(t) |

|

|

ctA |

− |

|

b ctA |

− |

· · · |

ctb D u(n−1)(t), |

||||||||||||||||||||

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

||

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

which proves the proposition if we take |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

||||||||

|

|

|

1 |

|

|

|

|

|

|

|

|

|

D |

|

0 |

|

|

· · · |

0 |

|

0 |

|

1 |

|

|

|||||||

|

|

s |

|

|

|

|

|

|

|

ctb |

|

D |

|

0 |

|

0 |

s |

|

||||||||||||||

|

1 |

|

|

2 |

|

|

|

|

|

|

1 |

|

|

t |

|

|

|

|

t |

|

|

· · · |

0 |

|

0 |

|

|

|

2 |

|

|

|

|

s. |

|

, |

oi = −O(A, c)− |

|

c Ab. |

|

c.b |

|

|

|

s. |

. |

|||||||||||||||||||

oo = O(A, c)− |

|

|

|

|

|

·.· · . |

|

. |

||||||||||||||||||||||||

|

|

.. |

|

|

|

|

|

|

|

.. |

|

|

.. |

|

|

.. .. |

|

.. |

.. |

|

|

|||||||||||

|

|

|

|

|

|

|

|

|

|

|

|

|

|

n |

2 |

|

n |

3 |

b |

|

|

|

|

|

|

|

|

|||||

|

|

sn−1 |

|

|

|

|

|

|

ctA |

|

− |

b ctA |

|

− |

· · · |

ctb D sn−1 |

|

|

||||||||||||||

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

||||

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

||||

While the above result does indeed prove the existence of an observer which exactly reproduces the state given the output and the input, it su ers from repeatedly di erentiating the measured output, and in practice this produces undesirable noise. To circumvent these problems, in the next section we introduce an observer that does not exactly measure the state. Indeed, it is an asymptotic observer, meaning that the error e(t) = x(t) − xˆ(t) satisfies limt→∞ e(t) = 0.

22/10/2004 |

10.5 State estimation |

431 |

ct

Dyˆ(t)

y(t) |

|

|

|

|

− |

i(t) |

|

|

|

|

|

|

|

||

|

|

|

|

|

|

|

|

` |

|

|

internal |

|

xˆ |

(t) |

|

|

|

|

|

|

|

||||||||||

|

|

|

|

|

|

|

|

|

|

|

|

|

|||

u(t) |

|

|

|

|

|

|

|

|

|

model |

|

||||

|

|

|

|

|

|

|

|

|

|

|

|

||||

|

|

|

|

|

|

|

|

|

|

|

|

||||

Figure 10.6 |

The schematic for a Luenberger observer |

|

|

||||||||||||

10.5.2 Luenberger observers The error schematic in Figure 10.5 has the feature that |

|

|

it is driven using the error e(t). The asymptotic observer we construct in this section instead |

|

|

uses thetso-called innovation defined as i(t) = y(t)−yˆ(t) where yˆ(t) is the estimated output |

|

|

yˆ(t) = c xˆ(t) + Du(t). The schematic for the sort of observer is shown in Figure 10.6. Note |

|

|

that the inputs to the observer are the actual measured output y(t) and the actual input u(t), |

|

|

and that the output is the estimated state xˆ(t). The vector ` Rn we call the observer |

|

|

gain vector. There is a gap in the schematic, and that is the “internal model.” We use as |

internal model |

|

the internal model the original system model, but now with the inputs as specified in the |

principle? |

|

schematic. Thus we take the estimated state to satisfy the equation |

|

|

˙ |

|

|

xˆ(t) = Axˆ(t) + bu(t) + `i(t) |

|

|

yˆ(t) = ctxˆ(t) + Du(t) |

(10.19) |

|

i(t) = y(t) − yˆ(t). |

|

|

From this equation, the following lemma gives the error e(t) = x(t) − xˆ(t).

10.44 Lemma If Σ = (A, b, ct, D) is a SISO linear system and if xˆ(t), yˆ(t), and i(t) satisfy (10.19), then e(t) = xˆ(t) − x(t) satisfies the di erential equation

e˙ (t) = (A − `ct)e(t).

Proof Subtracting

˙

x˙ (t) = Ax(t) + bu(t), xˆ(t) = Axˆ(t) + bu(t) + `i(t),

and using the second and third of equations (10.19) we get

e˙ (t) = Ae(t) − `i(t)

=Ae(t) − `(y(t) − yˆ(t))

=Ae(t) − `ct(x(t) − xˆ(t))

=Ae(t) − `cte(t),

as desired. |

|

The lemma now tells us that we can make our Luenberger observer an asymptotic observer provided we choose ` so that A − `ct is Hurwitz. This is very much like the Ackermann pole placement problem, and indeed can be proved along similar lines, giving the following result.

432 |

10 Stabilisation and state estimation |

22/10/2004 |

10.45 Proposition Let (A, b, ct, D) be an observable SISO linear system and suppose that the characteristic polynomial for A is

PA(s) = sn + pn−1sn−1 + · · · + p1s + p0.

Let P R[s] be monic and degree n. The observer gain vector ` defined by

0

`= P (A)(O(A, c))−1 · · ·

0

1

has the property that the characteristic polynomial of the matrix A − `ct is P .

Proof Note that observability of (A, c) is equivalent to controllability of (At, c). Therefore, by Proposition 10.13 we know that if

|

t |

− c` |

t `t = 0 · · · 0 1 (C(At, c))−1P (At), |

(10.20) |

then the matrix A |

|

has characteristic polynomial P . The result now follows by ttakingt |

||

the transpose of equation t(10.20), and noting that the characteristic polynomial of A − c` |

||||

is equal to that of A − `c . |

|

|||

10.46 Remarks 1. As expected, the construction of the observer gain vector is accomplished along the same lines as the static state feedback vector as in Proposition 10.13. Indeed, the observer gain vector is obtained by using the formula of Proposition 10.13 with At in place of A, and with c in place of b. This is another example of the “duality” between controllability and observability.

2.The eigenvalues of A − `ct are called the observer poles for the given Luenberger observer.

3.The notion of an observer is lurking in the proof of Theorem 10.27. This is flushed out in Section 10.5.3.

4.We are, of course, interested in choosing the observer gain vector ` so that A − `ct is Hurwitz. This can be done if Σ is observable, or more generally, detectable. To this end,

let us denote by D(Σ) those observer gain vectors for which A − `ct is Hurwitz. |

|

|||||

Let us illustrate this with an example. |

|

|

|

|

|

|

10.47 Example We consider the SISO linear system Σ = (A, b, ct, D) with |

|

|||||

A = 0 |

−1 |

, |

c = |

0 . |

|

|

1 |

0 |

|

|

1 |

|

|

Suppose that we wish a closed-loop characteristic polynomial of P (s) = s2 + 4s + 4. |

We |

|||||

compute |

|

|

|

|

|

|

P (A) = A2 + 2A + 2I2 |

= |

1 |

−2 |

, O(A, c) = |

0 1 . |

|

Then we have |

2 |

1 |

|

1 0 |

|

|

|

|

0 |

1 |

|

|

|

|

|

|

|

|

||

` = P (A(O(A, c))−1 1 = 2 , |

|

|

||||

22/10/2004 |

10.5 State estimation |

433 |

|||

giving |

− |

|

1 |

−2 |

|

|

|

|

|||

A |

|

`ct = |

0 |

−2 , |

|

which has the desired characteristic polynomial.

Now let us see how the observer does at observing. In Figure 10.7 we show the results

|

2 |

|

|

|

|

|

2 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

1 |

|

|

|

|

|

1 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

2 |

0 |

|

|

|

|

2 |

0 |

|

|

|

|

|

x |

|

|

|

|

xˆ |

|

|

|

|

|

||

|

-1 |

|

|

|

|

|

-1 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

-2 |

|

|

|

|

|

-2 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

-3 |

-2 |

-1 |

0 |

1 |

|

-3 |

-2 |

-1 |

0 |

1 |

|

|

|

|

xˆ1 |

|

|

|

|

|

x1 |

|

|

|

|

|

|

|

|

|

|

|

|

1.4 |

|

|

|

|

|

|

|

|

|

|

|

|

1.2 |

|

|

|

|

|

|

|

|

|

|

|

|

1 |

|

|

|

|

|

|

|

|

|

|

|

t)k |

0.8 |

|

|

|

|

|

|

|

|

|

|

|

e( |

|

|

|

|

|

|

|

|

|

|

|

|

k |

0.6 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

0.4 |

|

|

|

|

|

|

|

|

|

|

|

|

0.2 |

|

|

|

|

|

|

|

|

|

|

|

|

1 |

2 |

3 |

4 |

5 |

6 |

7 |

8 |

|

|

|

|

|

|

|

|

t |

|

|

|

|

|

|

Figure 10.7 The state (top left), the estimated state (top right), and the norm of the error for a Luenberger observer

of a simulation of equations (10.18) and (10.19) with |

|

b = (0, 1), D = 01, x(0) = (1, 1), xˆ(0) = 0, |

u(t) = 1(t). |

Note that the error is decaying to zero exponentially as expected. |

|

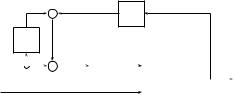

10.5.3 Static state feedback, Luenberger observers, and dynamic output feedback

In this section we bring together the ideas of static state feedback, Luenberger observers, and dynamic output feedback. It is by no means obvious that these should all be tied together, but indeed they are. To make the connection we make the obvious observation that if one does not possess accurate knowledge of the state, then static state feedback seems

434 |

10 Stabilisation and state estimation |

22/10/2004 |

a somewhat optimistic means of designing a controller. However, if one uses an observer to estimate the state, one can use the estimated state for static state feedback. The schematic is depicted in Figure 10.8. If the observer is a Luenberger observer satisfying (10.19) and if

r(t) |

|

|

u(t) |

|

plant |

|

|

y(t) |

− |

|

|

||||||

|

|

|

|

|

||||

|

|

|

|

|

|

|

|

|

observer

observer

xˆ (t)

f t

Figure 10.8 Static state feedback using the estimated state

the plant is the usual SISO state representation satisfying (10.18), then one may verify that the equations governing the interconnection of Figure 10.8 are

x˙ (t) |

= |

A |

|

−bft |

t |

x(t) |

+ |

b |

r(t) |

xˆ˙ (t) |

|

`ct |

A − bf |

− `ct xˆ(t) |

|

b |

(10.21) |

||

y(t) = ct |

−Dft |

xˆ(t) |

+ Dr(t). |

|

|

|

|||

|

|

|

|

x(t) |

|

|

|

|

|

The next result records the characteristic polynomial and the closed-loop transfer function for these equations.

10.48 Theorem Let Σ = (A, b, ct, D) be a SISO linear system with f Rn a static state feedback vector and ` Rn an observer gain vector for a Luenberger observer (10.19). Suppose that the observer is combined with state feedback as in Figure 10.8, giving the closedloop equations (10.21). Also consider the interconnection of Figure 10.9 where

r(t) |

|

|

|

|

RC (s) |

|

|

RP (s) |

|

y(t) |

− |

|

|

||||||||

|

|

|

|

|

|

|

||||

|

|

|

|

|

|

|

|

|

|

|

RO (s)

Figure 10.9 A dynamic output feedback loop giving the closedloop characteristic polynomial of static state feedback using a Luenberger observer to estimate the state