Jankowicz D. - Easy Guide to Repertory Grids (2004)(en)

.pdf

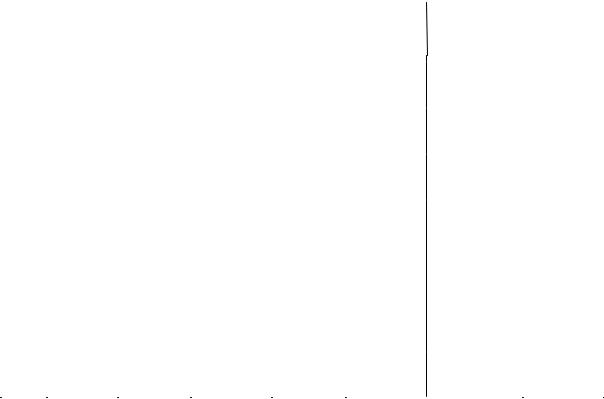

Table 7.4 Assessing reliability, step (4.3)

| Interviewer (rows) / Collaborator (columns) | 1 Current fashion | 2 Nature of purchasers | 3 Sales price | 4 Layout and design | 5 Coverage | 6 Competition | 7 Trade announcements | 8 Advertising budget |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| 1 Popularity of topic | 2.3, 5.8, 3.5, 5.3 | | 1.4 | | | 3.2 | | |
| 2 Buyer characteristics | | 1.1, 2.5, 3.7, 5.7 | | 4.6, 6.1 | 1.6, 6.4 | 6.6 | | |
| 3 Pricing decisions | | 7.1 | 1.2, 3.1, 4.4, 5.5, 6.3, 7.7 | 7.3 | 6.2 | | | 7.2 |
| 4 Design | | | | 1.5, 4.5, 7.5 | | | | |
| 5 Contents | 7.8 | | | | 4.3, 5.9, 7.6 | 3.6, 5.2 | | |
| 6 Competitors | | | 7.9 | | | 2.1, 1.3, 2.4, 3.3, 4.2, 5.1, 6.5 | | |
| 7 Promotion | | | 7.4 | | | | 1.7, 5.6, 6.7 | 2.2, 4.1, 3.4, 5.4 |

All of the constructs in the publisher’s example are shown here, identified by their code number.
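If you keep your coding on a computer rather than on paper, the step from a table of construct codes to a table of counts can be sketched as below. This is only an illustration: the `cells` dictionary is my transcription of Table 7.4, and the variable names are assumptions rather than part of the procedure itself.

```python
from collections import Counter

# Construct codes in each (interviewer category, collaborator category) cell,
# transcribed from Table 7.4; category numbers follow the table's numbering.
cells = {
    (1, 1): ["2.3", "5.8", "3.5", "5.3"],
    (1, 3): ["1.4"],
    (1, 6): ["3.2"],
    (2, 2): ["1.1", "2.5", "3.7", "5.7"],
    (2, 4): ["4.6", "6.1"],
    (2, 5): ["1.6", "6.4"],
    (2, 6): ["6.6"],
    (3, 2): ["7.1"],
    (3, 3): ["1.2", "3.1", "4.4", "5.5", "6.3", "7.7"],
    (3, 4): ["7.3"],
    (3, 5): ["6.2"],
    (3, 8): ["7.2"],
    (4, 4): ["1.5", "4.5", "7.5"],
    (5, 1): ["7.8"],
    (5, 5): ["4.3", "5.9", "7.6"],
    (5, 6): ["3.6", "5.2"],
    (6, 3): ["7.9"],
    (6, 6): ["2.1", "1.3", "2.4", "3.3", "4.2", "5.1", "6.5"],
    (7, 3): ["7.4"],
    (7, 7): ["1.7", "5.6", "6.7"],
    (7, 8): ["2.2", "4.1", "3.4", "5.4"],
}

# Replace each list of codes by its length to get the table of counts.
counts = {cell: len(codes) for cell, codes in cells.items()}

# Row totals belong to the interviewer, column totals to the collaborator.
row_totals = Counter()
col_totals = Counter()
for (row, col), n in counts.items():
    row_totals[row] += n
    col_totals[col] += n

grand_total = sum(counts.values())
print(dict(row_totals), dict(col_totals), grand_total)
```

A quick check that every construct appears exactly once, and that the row and column totals add up to the same grand total, catches most transcription slips before you go on to compute any index.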

Table 7.5 Assessing reliability, step (4.4)

| Interviewer (rows) / Collaborator (columns) | 1 Current fashion | 2 Nature of purchasers | 3 Sales price | 4 Layout and design | 5 Coverage | 6 Competition | 7 Trade announcements | 8 Advertising budget | Total |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| 1 Popularity of topic | 4 | | 1 | | | 1 | | | 6 |
| 2 Buyer characteristics | | 4 | | 2 | 2 | 1 | | | 9 |
| 3 Pricing decisions | | 1 | 6 | 1 | 1 | | | 1 | 10 |
| 4 Design | | | | 3 | | | | | 3 |
| 5 Contents | 1 | | | | 3 | 2 | | | 6 |
| 6 Competitors | | | 1 | | | 7 | | | 8 |
| 7 Promotion | | | 1 | | | | 3 | 4 | 8 |
| Total | 5 | 5 | 9 | 6 | 6 | 11 | 3 | 5 | 50 |

1. Index A: number of constructs along the diagonal for the categories agreed on, as a percentage of all the constructs in the table: 4 + 4 + 6 + 3 + 3 + 7 = 27; 50 constructs in total; 100 × 27/50 = 54%

2. Index B: number of constructs along the diagonal for the categories agreed on, as a percentage of all the constructs in the categories agreed on: 4 + 4 + 6 + 3 + 3 + 7 = 27; 42 constructs in the categories agreed on (5 + 5 + 9 + 6 + 6 + 11, or, of course, 6 + 9 + 10 + 3 + 6 + 8; it’s the same!); 100 × 27/42 = 64%
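The arithmetic behind Indices A and B can be sketched in a few lines. The counts are those of Table 7.5; the function name and the layout of the table as a list of rows are my own assumptions for the sake of illustration.

```python
# Counts from Table 7.5: rows are the interviewer's seven categories,
# columns are the collaborator's eight categories.
table_7_5 = [
    [4, 0, 1, 0, 0, 1, 0, 0],  # 1 Popularity of topic
    [0, 4, 0, 2, 2, 1, 0, 0],  # 2 Buyer characteristics
    [0, 1, 6, 1, 1, 0, 0, 1],  # 3 Pricing decisions
    [0, 0, 0, 3, 0, 0, 0, 0],  # 4 Design
    [1, 0, 0, 0, 3, 2, 0, 0],  # 5 Contents
    [0, 0, 1, 0, 0, 7, 0, 0],  # 6 Competitors
    [0, 0, 1, 0, 0, 0, 3, 4],  # 7 Promotion
]

# Positions (0-based) of the six categories both coders agree on.
agreed = range(6)


def agreement_indices(table, agreed):
    """Return (Index A, Index B) as percentages."""
    total = sum(sum(row) for row in table)
    diagonal = sum(table[i][i] for i in agreed)
    # Column totals for the agreed categories (the row totals give the same sum here).
    in_agreed = sum(row[j] for row in table for j in agreed)
    index_a = 100 * diagonal / total
    index_b = 100 * diagonal / in_agreed
    return index_a, index_b


index_a, index_b = agreement_indices(table_7_5, agreed)
print(f"Index A = {index_a:.0f}%, Index B = {index_b:.0f}%")
```

Index A is the stricter of the two, since constructs placed in categories that aren't yet agreed on count against you in the denominator.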

ANALYSING MORE THAN ONE GRID 161

But it’s still not good enough; a benchmark to aim at is 90% agreement, with no categories on whose definition you can’t agree. So:

(4.5) Negotiate over the meaning of the categories. Look at which categories in particular show disagreements, and try to arrive at a redefinition of the categories as indicated by the particular constructs on which you disagreed, so that you improve on the value of Indices A and B. Argue, debate, quarrel, just so long as you don’t come to blows. Break for lunch and come back to it if necessary!

For example, in Table 7.5, even without knowing what the constructs are, you can hazard a guess that the interviewer and collaborator will be able to agree on a single category, ‘promotion’, since announcements in the trade press, and advertising, might both be regarded as forms of promotion. This single redefinition would be sufficient to create a total set of seven categories which accounted for all the constructs and on which both were agreed.

Even if nothing else changed, a redrawing of Table 7.5 (see the result in Table 7.6) shows an improvement to 68% agreement. It is likely that this discussion will clarify the confusion which led to construct 7.2 being categorised under ‘pricing decisions’ by the interviewer, raising the index to 70%. Further discussion, concentrating on the areas of disagreement, would tighten up the definitions of the other categories. The aim is to get as many constructs onto the diagonal of the table as possible!
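The effect of this redefinition can be checked with a short sketch. It assumes the counts of Table 7.5, merges the collaborator's columns 7 and 8 into a single ‘promotion’ column, and then, as a further assumption about how the discussion of construct 7.2 might turn out, has the interviewer recode that construct from ‘pricing decisions’ to ‘promotion’. The function names are hypothetical.

```python
# Counts from Table 7.5 (interviewer rows, collaborator columns).
table_7_5 = [
    [4, 0, 1, 0, 0, 1, 0, 0],
    [0, 4, 0, 2, 2, 1, 0, 0],
    [0, 1, 6, 1, 1, 0, 0, 1],
    [0, 0, 0, 3, 0, 0, 0, 0],
    [1, 0, 0, 0, 3, 2, 0, 0],
    [0, 0, 1, 0, 0, 7, 0, 0],
    [0, 0, 1, 0, 0, 0, 3, 4],
]


def merge_columns(table, keep, drop):
    """Return a copy of the table with column `drop` added into column `keep`."""
    merged = []
    for row in table:
        new_row = list(row)
        new_row[keep] += new_row[drop]
        del new_row[drop]
        merged.append(new_row)
    return merged


def percent_on_diagonal(table):
    """Percentage of all constructs lying on the diagonal of a square table."""
    total = sum(sum(row) for row in table)
    return 100 * sum(table[i][i] for i in range(len(table))) / total


# Combine 'trade announcements' (index 6) and 'advertising budget' (index 7).
table_7_6 = merge_columns(table_7_5, keep=6, drop=7)
after_merge = percent_on_diagonal(table_7_6)

# Assumed outcome of the discussion: construct 7.2 moves from the
# interviewer's row 3 to row 7, staying in the collaborator's column 7.
revised = [list(row) for row in table_7_6]
revised[2][6] -= 1
revised[6][6] += 1
after_recoding = percent_on_diagonal(revised)

print(after_merge, after_recoding)
```

Working on a copy each time means you keep the ‘before’ table intact, which you'll need if you report both figures.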

(4.6) Finalise a revised category system with acceptably high reliability. The only way of knowing whether this negotiation has borne fruit is for each of you, interviewer and collaborator, to repeat the procedure. Redo your initial coding tables, working independently. Can you both arrive at the same result, including the assignment of the constructs to the carefully redefined categories?

Repeat the whole analysis again. That’s right! Repeat step 2 using these new categories. Repeat steps 3 and 4, including the casting of a new reliability table, and the recomputation of the reliability index.

This instruction isn’t, in fact, as cruel as it may seem. The categorisation activity is likely to be much quicker than before, since you will be clearer on category definitions and you will be using only agreed categories. It is still time-consuming, but there is no alternative if you care for the reliability of your analysis.
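Once you're both coding to the same agreed categories, the second run-through can be compared directly, construct by construct. The sketch below is a minimal illustration: the two codings are a small hypothetical subset, not data from the publisher's example, and the function name is an assumption.

```python
def compare_codings(coding_a, coding_b):
    """Return percentage agreement and the list of constructs coded differently."""
    shared = sorted(coding_a.keys() & coding_b.keys())
    if not shared:
        raise ValueError("the two codings have no constructs in common")
    disagreements = [
        (code, coding_a[code], coding_b[code])
        for code in shared
        if coding_a[code] != coding_b[code]
    ]
    agreement = 100 * (len(shared) - len(disagreements)) / len(shared)
    return agreement, disagreements


# Hypothetical second-round codings of five constructs (illustrative only).
interviewer = {
    "1.5": "design",
    "4.5": "design",
    "7.2": "pricing decisions",
    "7.4": "promotion",
    "7.5": "design",
}
collaborator = {
    "1.5": "design",
    "4.5": "design",
    "7.2": "promotion",
    "7.4": "pricing decisions",
    "7.5": "design",
}

agreement, disagreements = compare_codings(interviewer, collaborator)
print(f"{agreement:.0f}% agreement")
for code, mine, theirs in disagreements:
    print(f"construct {code}: interviewer '{mine}', collaborator '{theirs}'")
```

The list of disagreements is the useful part: it tells you which constructs, and therefore which category definitions, to concentrate on if another round of negotiation is needed.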

(4.7) Report the final reliability figure. The improved figure you’re aiming for is 90% agreement or better, and this is usually achievable.

There are more accurate measures of reliability, including ones which provide a reliability coefficient ranging between −1.0 and +1.0, which may be an obscure

Table 7.6 Assessing reliability, step (4.5)

| Interviewer (rows) / Collaborator (columns) | 1 Current fashion | 2 Nature of purchasers | 3 Sales price | 4 Layout and design | 5 Coverage | 6 Competition | 7 Promotion | Total |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| 1 Popularity of topic | 4 | | 1 | | | 1 | | 6 |
| 2 Buyer characteristics | | 4 | | 2 | 2 | 1 | | 9 |
| 3 Pricing decisions | | 1 | 6 | 1 | 1 | | 1 | 10 |
| 4 Design | | | | 3 | | | | 3 |
| 5 Contents | 1 | | | | 3 | 2 | | 6 |
| 6 Competitors | | | 1 | | | 7 | | 8 |
| 7 Promotion | | | 1 | | | | 7 | 8 |
| Total | 5 | 5 | 9 | 6 | 6 | 11 | 8 | 50 |

1. The collaborator’s category no. 7, ‘promotion’, is the result of combining the previous two categories: 7, ‘trade announcements’ and 8, ‘advertising budget’.

2. Index A: number of constructs along the diagonal for the categories agreed on, as a percentage of all of the constructs in the table: 4 + 4 + 6 + 3 + 3 + 7 + 7 = 34; 50 constructs in total; 100 × 34/50 = 68%

3. (As all the categories are now agreed on, index A is identical to what was earlier called index B.)

characteristic to anyone other than a psychologist or a statistician, who is used to assessing reliability in this particular way. Probably the most commonly used statistic in this context is Cohen’s Kappa (Cohen, 1968). However, if having a standard error of the figure you have computed matters to you, then the Perrault–Leigh Index is the appropriate measure to use: see Perrault & Leigh (1989).

The value of Cohen’s Kappa or the Perrault–Leigh Index which you would seek to achieve would be 0.80 or better. This is the standard statistical criterion for a reliable measure, but, if you’re conscientious about the way in which you negotiate common meanings for categories, a highly respectable 0.90 is typical for repertory grid content analyses.
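For those who'd like to see how these coefficients relate to the simple percentage agreement used so far, here is a rough sketch applied to the counts of Table 7.6. Two assumptions need flagging: the kappa computed is the ordinary unweighted form, and the second function uses the formula for the index as it is usually stated, the square root of (observed agreement − 1/k) × k/(k − 1) for k categories, which you should check against Perrault & Leigh (1989) before relying on it. The function names are mine.

```python
from math import sqrt

# Counts from Table 7.6 (interviewer rows, collaborator columns).
table_7_6 = [
    [4, 0, 1, 0, 0, 1, 0],
    [0, 4, 0, 2, 2, 1, 0],
    [0, 1, 6, 1, 1, 0, 1],
    [0, 0, 0, 3, 0, 0, 0],
    [1, 0, 0, 0, 3, 2, 0],
    [0, 0, 1, 0, 0, 7, 0],
    [0, 0, 1, 0, 0, 0, 7],
]


def observed_agreement(table):
    """Proportion of constructs on the diagonal (Index A divided by 100)."""
    n = sum(sum(row) for row in table)
    return sum(table[i][i] for i in range(len(table))) / n


def cohen_kappa(table):
    """Unweighted kappa: agreement corrected for what chance alone would give."""
    n = sum(sum(row) for row in table)
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    p_o = observed_agreement(table)
    p_e = sum(r * c for r, c in zip(row_totals, col_totals)) / n ** 2
    return (p_o - p_e) / (1 - p_e)


def perrault_leigh_index(table):
    """Index as commonly stated; assumed here, not quoted from the 1989 paper."""
    k = len(table)
    p_o = observed_agreement(table)
    if p_o < 1 / k:
        return 0.0
    return sqrt((p_o - 1 / k) * k / (k - 1))


kappa = cohen_kappa(table_7_6)
index_r = perrault_leigh_index(table_7_6)
print(f"kappa = {kappa:.2f}, Perrault-Leigh index = {index_r:.2f}")
```

On these counts the two figures come out at roughly 0.62 and 0.79, both short of the 0.80 criterion, which matches the conclusion already drawn from the 68% agreement figure: more negotiation is needed.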

And that’s that: with the completion of step 4 of our procedure, you’d continue with the remaining steps, 5 to 9, taking comfort that the categories devised in steps 5 to 9 were thoroughly reliable.

All of this seems very pedantic, and for day-to-day purposes, most people would skip the reanalysis of step 4.5. However, if you were doing all this as part of a formal research programme, especially one leading to a dissertation of any kind, you’d have to include this step, and report the improvement in reliability (it is conventional to report both the ‘before’ and the ‘after’ figure, by the way!).

Well and good; but haven’t you forgotten something? When you present the final results at steps 5 to 7 (the content-analysis table, with its subgroup columns for differential analysis as required), whose content-analysis table do you present: yours, or your collaborator’s? You’ve increased your reliability but, unless you’ve achieved a perfect 100% match, the two tables, yours and your collaborator’s, will differ slightly. Which should you use? Whose definition of reality shall prevail?

In fact, you should use your own (what we’ve been calling the interviewer’s content-analysis table), rather than your collaborator’s. You designed the whole study and it’s probably fair for any residual inaccuracies to be based on your way of construing the study, rather than your collaborator’s. (Though if someone were to argue that you should spin a coin to decide, I could see an argument for it based on Kelly’s alternative constructivism: that one investigator’s understanding of the interviewees’ constructs is as good as another’s, once the effort to minimise researcher idiosyncrasy has been made!)

Okay, this is a long chapter: take a break! And then, before you continue, please do Exercise 7.1.

164 THE EASY GUIDE TO REPERTORY GRIDS

7.2.2 A Design Example

I’d really like to set you an exercise with a realistically sized sample, with answers presented in Appendix 1, as I’ve done for the other procedures outlined in the previous chapters! However, it just isn’t possible to provide you with the data from 20 grids, and all the associated paraphernalia. (Exercise 7.1 will have to do. At least it focuses attention on what’s involved in reliability checking.) Hence the level of pedantic detail I’ve gone into in Section 7.2.1, to try to ensure that the procedure is readily understandable. The best way to learn a procedure is to do it, and when you do, you’ll find that everything falls into place.

Instead, let me provide you with a case example which addressed the same problem of how best to analyse a large number of grids, using a somewhat different approach and with slightly different design decisions being adopted. An examination of some slightly different answers adopted to the questions we’re addressing should help to establish the principles of what we’re doing. It’s worth examining in detail, as a further example of the sampling and design options involved in content analysis.

Watson et al. (1995) were interested in the tacit, as well as the more obvious, knowledge held by managers about entrepreneurial success and failure. This suggested the use of a personal construct approach, since constructs, being bipolar, are capable of saying something about both success and failure. (In fact, this issue was so important to the researchers that they took a design decision to work with two distinct sets of constructs, those dealing with successful entrepreneurship, and those dealing with unsuccessful entrepreneurship, doing a separate content analysis of each.)

Theirs was a large study. They identified 27 different categories for 570 constructs relating to success, and 20 categories for 346 constructs relating to failure, in a sample of 63 small-business owner-managers.

The top five categories assigned to successful entrepreneurship were ‘commitment to business’, ‘leadership qualities’, ‘self-motivation’, ‘customer service’, and ‘business planning and organising’, between them accounting for 42% of the success-related constructs. The top five categories assigned to unsuccessful entrepreneurship were ‘ineffective planning and organisation’, ‘lack of commitment’, ‘poor ethics’, ‘poor money management’, and ‘lack of business knowledge and skills’, accounting for 32% of the constructs which related to business failure.

Other variants on the approach outlined in Section 7.2.1 above are as follows. Their constructs were obtained, not through grid interviews, but by asking their respondents to write short character sketches of the successful entrepreneur, and the unsuccessful entrepreneur, together with their

circumstances, market, etc. The content analysis followed the classic Holsti approach towards the identification of content units and context units. Notice, since constructs had to be identified from connected narrative, rather than being separately elicited by repertory grid technique, there wasn’t a preexisting and obvious content unit. You’ll recall that grid-derived constructs provide you with a predefined unit of meaning. So, in that sense, their analysis was more difficult and much more time-consuming than the one I’ve outlined above.

Their categorisation procedure was carried out twice, on what were two distinct sets of data: the constructs about successful entrepreneurs, and the constructs about unsuccessful entrepreneurs. Thus, the differential analysis I have indicated as step 8 is, in their case, not an analysis, immediately amenable to statistical testing, of one single table divided up into groups of constructs, but a looser analysis ‘by inspection’ of differences between the two complete data sets. It’s clear, however (see Watson et al., 1995: 45), that the two solid and comprehensive sets of constructs constitute a database which is available for further analysis in a variety of ways. For example, they have carried out cluster analyses of both their data sets, although the details of the procedure they followed are not reported.

Their reliability check was a careful and detailed six-step procedure in which two independent raters:

- sorted constructs written onto cards into categories
- privately inspected and adjusted their category definitions
- negotiated over the meanings
- privately adjusted their category definitions again
- renegotiated
- finally agreed the definitive category set.

These categories were ‘approximately the same’ as a result, although no computation of a reliability index or coefficient was reported.

In Conclusion

If we stand back from the details of this generic bootstrapping technique for a moment, one characteristic, in particular, is worth noting. The generic technique as I’ve described it, and as applied in their own way by Watson et al. (1995), emphasises the meanings present in the constructs, but discards information about the ways in which interviewees use those constructs. There were no ratings available in Watson’s study since the constructs were obtained from written character sketches rather than from grids.

But where ratings are available, as in the procedure outlined in Section 7.2.1, it seems a shame not to use them to capture more about the personal meanings being aggregated in the analysis. What a pity to disregard the individual provenance which leads one person to rate an element ‘1’ on a given construct, and another person to rate the same element ‘5’ on a construct which the content analysis has demonstrated means the same to both people!

But how can this be done? It’s rather a tall order, you might argue. Though there’s an overlap in meaning for different interviewees, they don’t each use the same set of constructs, so how can one make use of the ratings in a regular and ordered way? Well, the content-analysis technique presented in Section 7.3 does just that. In the meanwhile, we need to consider a further generic approach.

At this point, you may feel you’d like to get away from my artificial examples, and case examples like the one above, and essay a content analysis of your own. Exercise 7.2 gives you the opportunity to do so.

7.2.3 Standard Category Schemes

Pre-existing, standard category schemes come from two alternative sources:

- they’re the result of a previous bootstrapping exercise by yourself or someone else; or
- they’re drawn up following a category system based on pre-existing research, theory, or assumptions which are felt to be relevant to the topic.

In either case, step 1 of the content-analysis procedure outlined in Section 7.2.1 has already been carried out for you. The categories already exist, and you use the scheme in your analysis from step 2 onwards. That is, you code, tabulate, and carry out a differential analysis as required. The reliability check of step 4 is probably unnecessary if the pre-existing categories are already known, from previous research, to provide a reliable way of summarising the topic in question, but you still need to be conscientious about the consistency with which you assign the constructs to the categories, and you might want to do the ‘second run-through’, as I indicated in step (4.6) above.

Bootstrapped Schemes

After going to all the trouble involved in bootstrapping a category scheme, one’s inclined to put it to further use! Now that it’s pre-existing, as it were, it’s useful to put it to work in order to categorise new constructs should your circumstances require a fresh set of interviews. This would seem entirely appropriate if the topic remained the same from the first to the second occasion, and you were satisfied that the new interviewees were, as a sample, from the same population, so far as thinking about the topic is concerned. Nevertheless, you’d probably want to review the literature relevant to your topic area, to draw on some background theory to which you could appeal in establishing this.

Many of the personnel-psychology applications of grid technique (e.g., Jankowicz, 1990) depend on an initial job-analysis grid in which the different ways in which effective employees do their jobs are expressed in the form of constructs, and then categorised. This scheme can then be used to devise standard scales for use in the development of

- competency frameworks
- performance-appraisal questionnaires
- training needs analyses
- personal development need identification.

After a bootstrapping approach has been used to devise the original category scheme using the constructs of a group of employees, the categories might subsequently be applied to other groups of employees, if it is established that the jobs done by the original, and the new, groups of employees are similar. Section 7.3 provides further examples of applications in job analysis, and development of employee-appraisal questionnaires.

Theory-Based Schemes

Perhaps the best-known standard category system is Landfield’s (Landfield, 1971). Twenty-two distinct categories, ranging over such themes as ‘social interaction’, ‘organization’, ‘imagination’, ‘involvement’, and ‘humour’, are offered, together with a set of detailed guidelines to their use; and careful definitions of what is, and what isn’t, an example of each category are provided. While this system has been used mainly in clinical work (Harter et al., 2001, give a recent case example), it is sufficiently general to be helpful in a variety of personal and interpersonal situations.

More recently, Feixas et al. (2002) have developed a 45-category system divided into six overall themes, dealing with moral, emotional, relational,

personal, intellectual/operational, and value and interest-related constructs. Superficial constructs (which I assume would include some types of propositional construct: see Section 5.3.3), figurative constructs involving comparisons with particular other individuals, and constructs applicable to particular relationships have been excluded from the scheme. Great care has been taken to ensure the reliability of the scheme, and the level achieved, for what is a generic system, is comparable to the levels of reliability achievable with the more specific category systems obtained by bootstrapping (as outlined in the previous section). For those of a statistical inclination, a mean percentage agreement figure of 87.3%, corresponding to a Cohen’s Kappa of 0.95 on the six themes and 0.89 on the 45 categories, and to 0.96 and 0.93, respectively, using the Perrault–Leigh Index, are reported.

Viney has provided scales for assessing cognitive anxiety (broadly, uncertainty-related anxiety) by drawing on Kelly’s personal construct theory to revise earlier scales constructed in the psychoanalytic tradition (Viney & Westbrook, 1976).

Duck (1973) has devised a category scheme for assessing interpersonal relationships, based on a personality-role-interaction classification. Other schemes for categorising constructs commonly used in social settings are briefly described in Winter (1992: 31–32).

Combining Bootstrapping and Theory-Based Approaches

Finally, Hisrich & Jankowicz (1990) provide an example of a pilot study in which bootstrapping and the use of a pre-existing scheme were combined. It was known from previous theory and research that when they take an investment decision, venture capitalists pay attention to three main characteristics of the proposal: the managerial expertise in the company seeking funds (MacMillan et al., 1987), the market opportunity, and the cashout potential (Tyebjee & Bruno, 1984; MacMillan et al., 1987) for the whole venture. Hisrich & Jankowicz (1990) followed the bootstrapping procedure to arrive at nine categories classifying 45 constructs used by five venture capitalists. They found that these reflected three superordinate headings:

- ‘management’ (which fits into what is known about managerial expertise)
- ‘unique opportunity’ (a match with what is known about the importance of market opportunity in the literature)
- ‘appropriate return’, which, as its component constructs indicate, is synonymous with ‘cash-out potential’.

This was a small-scale pilot study, with only 45 constructs in total. However, as Table 7.7 shows, the value in doing a bootstrapping content analysis, rather