Understanding the Human Machine - A Primer for Bioengineering - Max E. Valentinuzzi


lar physiopathological situation. We will introduce the frequency and time domain concepts, referring to the specific literature for detailed descriptions. The concepts of discretization and algorithm will also be introduced. The student will, in due time, take courses devoted to this matter. Herein, we just want to offer an overview so that the newcomer knows where he/she stands and what to expect.

6.2. Pattern Reading

Karl Ludwig, in Leipzig, initiated the modern era of continuous records in physiology when he introduced the kymograph in 1847 (Geddes, 1970). It was a landmark, indeed, that changed the course of the physiological sciences. Mechanical and electrical events, especially those associated with the cardiovascular system, started down a long road toward the dissection of each of their respective components. Physiologists and physicians alike had to learn by sheer hands-on experience what is known as pattern reading (that is, extracting information from the shape of the tracing by visual inspection), perhaps the first step of the interpreter. Let us recall some specific examples, while we recommend that the student review Chapters 2 and 3, especially the sections dealing with signals.

To read the ECG, Wilhelm Einthoven, physiologist and physician (1903), with his string galvanometer as recording apparatus, and Thomas Lewis (1911), with his classical treatise (The Mechanism of the Heart Beat), are to be considered founders and great teachers who patiently established criteria and rules still valid in clinical electrocardiology (Figure 2.31). Contributions of the many others who followed improved knowledge and understanding of different aspects of this signal; for instance, an upward or downward deviation of the ST segment, depending on its extent, is associated with myocardial ischemia or infarct (Figure 6.1), while a long QRS and slurring of the trace are very likely related to bundle branch block and to desynchronization in the pumping action of both ventricles (Figure 6.2). A long PR interval speaks of partial atrioventricular block, anticipating a probable second-degree block (Goldman, 1970).

Figure 6.1. MYOCARDIAL INFARCTION. It is marked by the appearance of Q waves, elevation or depression of the S-T segment and inversion of T waves. These records depict an anterior wall infarction a few hours after it took place. There is a clear and significant S-T shift. Calibration marks: 1 mV and 0.4 s. After an ECG course given by Dr. Carlos Vallbona, Department of Physiology, Baylor College of Medicine, Houston, Texas, 1968.

Chapter 6. The Interpreter: Reading the Signals

Direct cannulation is required to record arterial blood pressure as a function of time (see Figures 2.14, 3.13 and 3.14). The pressure may range from 20 mm Hg to 320 mm Hg. The shape of the dicrotic notch, associated with aortic valve closure, can reveal abnormalities (such as insufficiency or stenosis), while a large differential (or pulse) pressure is an indicator of arterial hardening or arteriosclerosis. A highly compliant aorta (i.e., less stiff) will have a smaller pulse pressure for a given cardiac stroke volume. There are non-invasive techniques to record pulse pressure with the goal of better patient screening based on pattern changes. Thus, pulse pressure seems to be a potent and clinically useful predictor of the risk of cardiovascular disease in a variety of populations. Increasing pulse pressure is strongly associated with future coronary heart disease risk in middle-aged normotensives as well as hypertensives, in African Americans as well as in whites (see Klabunde’s website).

Figure 6.2. BUNDLE BRANCH BLOCK. It occurs when there is an impediment to the conduction of the cardiac electrical impulse along one of the branches of the bundle of His. Prolonged QRS and slurring are two characteristics well depicted in the records shown above. Right bundle branch block, while still having the same features as left bundle branch block (shown in these records), must be diagnosed by other added details present in different leads, especially the precordial ones. Calibration marks: 1 mV and 0.4 s. After an ECG course given by Dr. Carlos Vallbona, Department of Physiology, Baylor College of Medicine, Houston, Texas, 1968.

Other examples of biological signals include the electroencephalogram (EEG), the electromyogram (EMG), and heart sounds, all collected over the years essentially in analog form, i.e., signals defined over all time with infinite precision; they are continuous-time, continuous-amplitude recordings. Nowadays, there are digital databanks that store and update this physiological information and experimenters and practitioners are being trained with these modern tools while also using traditional philosophy (www.physionet.org).

6.3. Discretization of a Signal

Digital computers, as the name indicates, do not deal with continuous signals. The analog signal is converted to discrete numerical pieces, both in time and in amplitude. Hence, the recorded experimental or clinical trace is discretized. Often, the words digitization and digitalization are used as synonyms for this process.

6.3.1. Horizontal Discretization: Sampling Time Interval

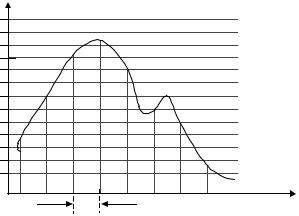

Referring to Figure 6.3, suppose a continuous blood pressure record is to be digitized. The first step consists of selecting a sampling interval ∆t at which to pick up amplitudes of the signal, corresponding to instants 0, 1, 2, …, (n–1), n; since ∆t is kept constant, the time difference (Tn – Tn–1) = ∆t, always. Obviously, 1/∆t defines the sampling rate fs. The vertical bars in Figure 6.3 make up the sequence or collection of numbers that now represent the record. We could connect the bar tops sequentially with straight segments in an attempt to reconstruct the original trace; however, even though the first 5 points and the last 2, connected by 4 and 1 straight lines, respectively, essentially coincide with the record, the 5th, 6th and 7th bars miss the minimum A and the maximum B of the dicrotic notch. It appears clear that, roughly, just by eyeballing the figure, the sampling rate should have been at least twice as fast (meaning a shorter sampling interval) to capture all of the features of the signal.

Figure 6.3. CONCEPT OF SAMPLING. It means converting an analog signal to a discrete-time sequence of numbers. The trace above mimics a blood pressure record where A and B correspond, respectively, to the minimum and maximum of the dicrotic region. (Axes: Amplitude versus Time; the sampling instants 0, 1, …, n–1, n are spaced ∆t apart.)

In fact, the Nyquist or Sampling Theorem tells us that under ideal conditions we can successfully sample and reconstruct or play back frequency components up to one-half the sampling frequency. Thus, a sinusoidal signal (or a component of a more complex signal, such as the dicrotic notch shown in Figure 6.3) can be correctly reconstructed from values sampled at discrete, uniform intervals as long as the highest signal frequency is less than half the sampling frequency. Any component of a sampled signal with a frequency above this limit, often referred to as the folding frequency, is subject to a distorting phenomenon named aliasing (alias, Latin for "otherwise", has come to mean an assumed identity: the aliased component does not really exist in the signal). In other words, undersampling creates frequency components below the folding frequency that do not exist in the original signal, and these are folded back into the true data. In practice, sampling is usually done at a rate of at least three times the maximum frequency that will appear in the data, and a special low-pass or anti-aliasing filter is applied before sampling to guarantee that aliasing will not occur.
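The sampling criterion stated above can be written out as a short check. This is only a minimal sketch: the function names and the 20 Hz figure for the fastest feature of a pressure trace are assumptions chosen for illustration, not values taken from the text.

```python
def nyquist_frequency(fs_hz):
    """Folding frequency: half the sampling rate, in Hz."""
    return fs_hz / 2.0


def is_adequately_sampled(f_max_hz, fs_hz):
    """True when the highest signal frequency lies strictly below fs/2."""
    return f_max_hz < nyquist_frequency(fs_hz)


def practical_sampling_rate(f_max_hz, factor=3.0):
    """Rule of thumb from the text: sample at least three times f_max."""
    return factor * f_max_hz


# Hypothetical example: a trace whose fastest feature is assumed to be near 20 Hz.
f_max = 20.0
for fs in (30.0, 40.0, 60.0, 200.0):
    print(fs, nyquist_frequency(fs), is_adequately_sampled(f_max, fs))
print("suggested rate:", practical_sampling_rate(f_max), "Hz")
```

Note that sampling at exactly twice the highest frequency (40 Hz in this toy case) does not pass the check, because the theorem requires the signal frequency to be strictly less than half the sampling frequency.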


Exercise: Obtain the limiting sampling rate. Find the maximum sampling period for a sinusoid of 100 Hz. Choose any musical CD and find its sampling frequency. What is the human audible frequency range? Does the CD digital music meet the sampling theorem requirement?

Aliasing can be explained easily and didactically in terms of a visual sampling system: movies. We all have watched a western where the wheel of a rolling wagon, desperately trying to get away from a bunch of mean outlaws, appears to be going backwards. This moving image is aliasing. The movie's frame rate undersamples the rotational frequency of the wheel, and our eyes are deceived by the misinformation. This also happens when we try to record and play back frequencies higher than one-half the sampling rate.

Consider a digital audio system with a sample rate of 50 kHz, recording a steadily rising sine wave tone. At lower frequencies, the tone is sampled with many points per cycle. As the tone rises in frequency, the cycles get shorter and fewer and fewer points are available to describe each shorter cycle. At a frequency of 25 kHz, only two sample points are available per cycle; this is the Nyquist sampling limit. If the pitch of the tone continues to rise, the number of samples per cycle is not adequate to describe the waveform, and the inadequate description is equivalent to one describing a lower frequency tone; this is the alias or artificially created tone. In fact, the tone folds back into the data about the 25 kHz point. A 26 kHz tone sampled at 50 kHz becomes indistinguishable from a 24 kHz tone, and a 30 kHz tone becomes a 20 kHz tone; both are obnoxious forms of distortion. In music, with its many frequencies and harmonics, aliased components mix with the real frequencies to yield a particularly unpleasant distortion. And there is no way to undo the damage. That is why steps must be taken to avoid aliasing from the beginning (see http://www.earlevel.com/Digital%20Audio/Aliasing.html; for a demonstration of sinusoidal aliasing, see http://www.dsptutor.freeuk.com/aliasing/AD102.html).
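The folding described above can be checked numerically. The sketch below is only an illustration; the helper names alias_frequency and sample_cosine are hypothetical, and a cosine is used so that the aliased samples coincide exactly with those of the lower tone.

```python
import math


def alias_frequency(f_hz, fs_hz):
    """Apparent frequency of a tone f_hz after sampling at fs_hz (0..fs/2)."""
    f_mod = f_hz % fs_hz               # sampling cannot tell f from f + k*fs
    return min(f_mod, fs_hz - f_mod)   # fold about the Nyquist point fs/2


def sample_cosine(f_hz, fs_hz, n_samples):
    """Samples of cos(2*pi*f*t) taken at t = n/fs."""
    return [math.cos(2.0 * math.pi * f_hz * n / fs_hz) for n in range(n_samples)]


fs = 50_000.0
for tone in (10_000.0, 24_000.0, 26_000.0, 30_000.0):
    print(tone, "Hz is seen as", alias_frequency(tone, fs), "Hz")

# The 26 kHz and 24 kHz tones yield the same sample values at 50 kHz.
high = sample_cosine(26_000.0, fs, 16)
low = sample_cosine(24_000.0, fs, 16)
max_diff = max(abs(a - b) for a, b in zip(high, low))
print("largest sample difference:", max_diff)
```

Because the two sample sequences agree to within rounding error, nothing applied after sampling can tell the tones apart, which is why the anti-aliasing filter has to act on the analog signal.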

6.3.2. Vertical Discretization: Amplitude Quantization

The signal amplitude of the trace in Figure 6.3 is continuous. The amplitude values of the sampled data are whatever value the trace has at the particular sampling instant. To complete the discretization process, the full-scale amplitude range of the signal in volts must be divided into a finite number of equal amplitude steps, ∆q. Thus, quantization is the conversion of a discrete-time, continuous-amplitude signal to a discrete-time, discrete-amplitude signal. The calibrated trace in pressure units is expressed in counts (so many ∆q steps), the number of which is determined by the number of bits (or binary digits) used to encode the full-scale amplitude. The more bits (hardware), the smaller the ∆q step and the more accurate the amplitude representation. This process leads to a vertical resolution that, as with the horizontal time axis, may miss small variations of the signal. The relationship used to establish the vertical step ∆q is

$$\Delta q = \frac{Q_{fs}}{2^{b} - 1} \tag{6.1}$$

where “b” stands for the number of bits and Qfs is the full-scale signal amplitude at the sampling time, which for design calculation purposes is taken as the maximum expected value. For example, if only 3 bits are to be used and the expected maximum systolic pressure is fixed at, say, 240 mmHg, ∆q would be a voltage approximately equal to a pressure of 30 mmHg (240/7 ≈ 34 mmHg by Equation 6.1, rounded here to the 240/8 = 30 mmHg step used in what follows), obviously giving a rather coarse resolution. Three bits can code eight unique binary numbers (000, 001, 010, 011, 100, 101, 110, 111), or seven intervals, corresponding to eight pressure levels of (0, 30, 60, 90, 120, 150, 180, 210) mmHg. Observe that the last level of 240 mmHg is missed by the 3-bit code. Should we want that last level, we would have to calculate the vertical step using 320 mmHg as maximum, but in that case the resolution would worsen to 40 mmHg. Typically, analog-to-digital converters use a minimum of 8 bits, for a resolution of one part in 255, leading to an accuracy of better than one percent. If the full-scale voltage were 5 volts, the system could resolve approximately 20 millivolts.
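The arithmetic of this example can be reproduced with a few lines. This is a sketch under stated assumptions: quantization_step implements Qfs/(2^b − 1) as Equation 6.1 is read here, quantization_levels is a hypothetical helper, and the 30 mmHg step is the rounded value used in the text rather than the output of the formula.

```python
def quantization_step(full_scale, bits):
    """Vertical step as Equation 6.1 is read here: full_scale / (2**bits - 1)."""
    return full_scale / (2 ** bits - 1)


def quantization_levels(step, bits):
    """The 2**bits amplitude levels coded by a given step, starting at zero."""
    return [k * step for k in range(2 ** bits)]


# 3-bit pressure example: the formula gives about 34.3 mmHg for 240 mmHg full scale.
print(quantization_step(240.0, 3))

# Levels obtained with the rounded 30 mmHg step quoted in the text.
print(quantization_levels(30, 3))

# 8-bit converter with a 5 V full scale: about 0.0196 V, i.e. roughly 20 mV.
print(quantization_step(5.0, 8))
```

With the rounded 30 mmHg step the top code lands on 210 mmHg, which is the missed 240 mmHg level mentioned in the text; with the unrounded step from the formula the eighth level would fall on 240 mmHg.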

In short, the continuous signal recording space is covered with a grid formed by rectangles (∆q×∆t); the discretization process records only the grid corners closest to the continuous trace, and only occasionally does the trace pass exactly through one of those corners. Obviously, those corners correspond to the intersections of the pre-established vertical and horizontal lines, which are the boundaries of ∆t and ∆q.
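The grid picture can be sketched by sampling a trace every ∆t and rounding each sample to the nearest multiple of ∆q. Everything in the example is an assumption made for illustration: the discretize helper, the toy sinusoidal "pressure" wave (not physiological data), and the chosen values of ∆t = 0.1 s and ∆q = 5 mmHg.

```python
import math


def discretize(signal, duration_s, dt_s, dq):
    """Sample signal(t) every dt_s seconds and round to the nearest dq step.

    Returns a list of (time, counts) pairs, i.e. the recorded grid corners.
    """
    n_samples = int(round(duration_s / dt_s)) + 1
    grid_points = []
    for n in range(n_samples):
        t = n * dt_s                     # horizontal grid line
        counts = round(signal(t) / dq)   # nearest vertical grid line
        grid_points.append((t, counts))
    return grid_points


def toy_pressure(t):
    """Hypothetical pressure-like wave in mmHg, used only as test input."""
    return 100.0 + 20.0 * math.sin(2.0 * math.pi * 1.2 * t)


points = discretize(toy_pressure, duration_s=1.0, dt_s=0.1, dq=5.0)
for t, counts in points:
    print(f"t = {t:.1f} s   counts = {counts}   value = {counts * 5.0:.0f} mmHg")
```

Each stored value differs from the true amplitude by at most half a step (2.5 mmHg here), which is the vertical counterpart of the features missed between sampling instants.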

Exercise: Take any of the records displayed in Chapters 2 and 3 and design an adequate discretization grid. Repeat with several examples of your own and discuss.


6.4. What Do We Do With the Signals?

Why did we bother to digitize the signal? We need a digital representation of the signal to be able to use computer software to help us interpret the signal with a view toward better understanding of the physiological process(es) by which the signal was created.

Well, here is the signal, discretized, stored in a computer memory, and ready as raw material to be processed in order to get into the interpretation stage. Physiological signals are naturally presented as graphic nonexplicit functions of time; that is, we never know the mathematical expression relating the event (action potential, blood pressure, substance concentration, or other) to the independent variable t. The discretization process introduced above is the beginning of a time domain analysis. Any other procedure applied to that collection of sequentially ordered numbers following a given mathematical rule (an algorithm), usually based on a mathematical model, either general or specific, enters into the actual analysis of that time course signal in order to search for significant information. Models, then, become an integral part of the analytical stage. Sometimes the equations to start with can be set up from physical or physiological principles; at other times they can only be supported by empirical facts. However, in all cases, the experimentally recorded signal is the guiding light that keeps us tethered to the real, living world.

The physiological signal, however, is a complex event, many times periodic or quasi-periodic; at times, it shows itself as a more or less isolated burst or pulse (so increasing its complexity), as is the case with nerve or muscle action potentials or with some neuroendocrine hormones. Frequency domain analysis, such as Fourier’s, teaches us that complex physiological signals may be decomposed into a series of simple sinusoids; such techniques are borrowed from the engineering sciences and permit breaking up the ECG, EEG, EMG and the like. Mathematical models also become an integral part of this frequency domain analysis. In the end, both types of analysis should produce similar or equal results. This subject belongs to a more advanced course called Physiological Control Systems, where topics like linear and nonlinear modeling are dealt with.
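The decomposition into sinusoids can be illustrated with a direct discrete Fourier transform. The sketch below is deliberately naive (a plain sum rather than the FFT), and the record it analyzes is a made-up mixture of a 2 Hz and a 5 Hz sinusoid sampled at an assumed 64 Hz; none of these values come from the text.

```python
import cmath
import math


def dft_amplitudes(samples):
    """Amplitude of each sinusoidal component for bins k = 0 .. N/2."""
    n = len(samples)
    amplitudes = []
    for k in range(n // 2 + 1):
        # Correlate the record with a complex sinusoid making k cycles in N samples.
        acc = sum(x * cmath.exp(-2j * math.pi * k * i / n)
                  for i, x in enumerate(samples))
        # Bins strictly between 0 and N/2 carry half the amplitude, so double them.
        scale = 1.0 if k == 0 or 2 * k == n else 2.0
        amplitudes.append(scale * abs(acc) / n)
    return amplitudes


fs = 64.0   # assumed sampling rate, Hz
n = 64      # one second of data, so bin k corresponds to k Hz
toy = [1.0 * math.sin(2 * math.pi * 2.0 * i / fs)
       + 0.5 * math.sin(2 * math.pi * 5.0 * i / fs) for i in range(n)]

amps = dft_amplitudes(toy)
peaks = [(k, round(a, 3)) for k, a in enumerate(amps) if a > 0.01]
print(peaks)
```

Only the bins at 2 Hz and 5 Hz stand out, with amplitudes close to the 1.0 and 0.5 used to build the record. A real physiological record would not line up so neatly with the bins, and its energy would spread over neighbouring frequencies.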

In signal analysis the ingenuity, knowledge and experience of the interpreter are important. Classical methods, such as convolution, Laplace transforms, and Fourier methods, are used to analyze and represent biomedical signals. Linear systems are represented by transfer functions, providing the basis for system identification in the time and frequency domains. Important analytical techniques include the Fast Fourier Transform (FFT) and the z-transform. Statistical procedures are also used, such as autocorrelation or cross-correlation. One of the main characteristics of real-life signals is their non-stationary, multicomponent nature and, many times, conventional methods fail to fully analyze these signals; investigators have used joint time-frequency analysis methods, like the Wigner distribution, to better reveal information contained in recordable biological signals.
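As a small illustration of one of the statistical procedures mentioned, autocorrelation can expose the repetition period of a record. This is a minimal sketch: the function names are hypothetical, the record is a clean synthetic sinusoid with a period of 25 samples, and picking the largest peak after the first negative value is one simple heuristic among several.

```python
import math


def autocorrelation(samples, max_lag):
    """Normalized autocorrelation of a mean-removed record for lags 0..max_lag."""
    n = len(samples)
    mean = sum(samples) / n
    centered = [x - mean for x in samples]
    energy = sum(c * c for c in centered)
    return [sum(centered[i] * centered[i + lag] for i in range(n - lag)) / energy
            for lag in range(max_lag + 1)]


def dominant_period(acf):
    """Lag of the largest peak found after the curve first goes negative."""
    lag = 1
    while lag < len(acf) and acf[lag] > 0.0:
        lag += 1                       # skip the broad peak around lag 0
    if lag >= len(acf):
        return None                    # no repetition detected in this range
    return max(range(lag, len(acf)), key=lambda k: acf[k])


# Synthetic record: 200 samples of a sinusoid repeating every 25 samples.
record = [math.sin(2.0 * math.pi * i / 25.0) for i in range(200)]
acf = autocorrelation(record, max_lag=60)
period = dominant_period(acf)
print("estimated period:", period, "samples")
```

If the samples were taken at an assumed 25 Hz, a period of 25 samples would correspond to one cycle per second. With noisy or non-stationary records the peak broadens and shifts, which is one reason the joint time-frequency methods mentioned above are used.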

Hence, one first and simple conclusion states that, to help in reading the signal, processing of biomedical signals refers to the application of signal processing methods to biomedical signals. It even sounds naïve and repetitious. A well-known sequence of processing steps for signal analysis is: (1) artifact rejection; (2) feature extraction; and (3) pattern recognition.

A second simple conclusion says that any and all possible processing algorithms may be used. In other words, do not inhibit yourself if an apparently crazy idea for analysis suddenly pops up.

Obviously, good and sensible biomedical signal processing requires an understanding of the needs (e.g., biomedical and clinical requirements) and adequate selection and application of suitable methods to meet these needs. Within the rationale for biomedical signal processing, we should mention signal acquisition and signal processing to extract a priori desired information aiming at interpreting the nature of a physiological process based either on (a) observation of a signal (explorative nature), or (b) observation of how the process alters the characteristics of a signal (say, by monitoring a change of a predefined characteristic).

Goals for biomedical signal processing can be summarized as follows:

1. Quantification and compensation for the effects of measuring devices and noise on signal;

2. Identification and separation of desired and unwanted components of a signal;

3. Uncovering the nature of phenomena responsible for generating the signal on the basis of the analysis of the signal characteristics;

4. Eventually leading to modelling, but often more pragmatic than pure modelling;

5. Test the understanding one gains via signal analysis by trying to predict the effect on the signal of an experimental manipulation.

Student task: Find the definition of algorithm. Dissect the algorithm of multiplication and the algorithm of division into their respective simple operations. Etymologically, the word algorithm derives from Arabic.

Another area of interest into which signal processing can bring light is biomedical signal classification, based on signal characteristics, signal source, and applications. In this respect, signals may be deterministic (accurately described mathematically and rather predictable), periodic or almost periodic, transient, stationary (statistical properties do not change over time), stochastic (defined by their statistical distribution), ergodic (statistical properties may be computed along time distributions). Some people claim, for example, that all real biosignals may be considered stochastic, a helpful concept indeed when trying to model, for example, the EEG.

Student study subject: Dig a little deeper into the concepts of deterministic, stochastic and ergodic signals. Search for their essential characteristics. They have complex definitions, even if one tries simple versions. For example, “a phenomenon is stochastic (random) in nature if it obeys the laws of probability”, or else, “a stochastic process is a statistical process involving a number of random variables depending on a variable parameter (which is usually time)”. For ergodic you will run into definitions such as “an attribute of stochastic systems; generally, a system that tends in probability to a limiting form that is independent of the initial conditions”. However, when going into mathematical definitions, things become rather complex and should be better left for the specialist unless the student decides to put considerable effort and time in the subject. Related words are haphazardness, randomness and noise, all of them always associated with “lacking any predictable order or plan”.

6.5. Final Remarks and Conclusions

The biomedical signal must be read, must be understood and must be interpreted in its relationship with the pathophysiological event. This is the main message of the chapter. Noise, interference and/or artifacts have to be identified and removed; otherwise, we run the risk of attaching biological meaning to a non-existent event. Techniques and procedures to better read the signal, starting with simple visual inspection up to using the most sophisticated algorithm based perhaps on complex mathematical models and assumptions, belong to the area of signal processing. You are going to take courses devoted to signal processing and this chapter should offer you a platform with which to begin.

Feature extraction and pattern detection are still the beginning and main background of the interpreter. Thereafter, the interpreter, armed with his/her personal computer, must discretize the signal, as explained above. Usually, this acquisition process is performed by analog-to-digital converters (A/D converters), to finally enter into the processing proper of the signal, as outlined in section 6.4 above. But remember, in the end interpretation is made only by the human being.

Among other points to mention about biological signals is their characteristic spontaneous and huge variability in amplitude and frequency. The so-called physiological periodic signals are not periodic; at best they are quasi-periodic, for the ECG, blood pressure, and respiration, for example, change frequency and also amplitude with time. Other physiological events are more variable and, in some cases, rather difficult to predict. Very likely, such variability contains information of potential clinical value. For that purpose, special numerical techniques have been developed and are constantly under research, most of them of the nonlinear type (Cerutti, 2002). This area offers attractive possibilities for the young investigator.
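A first, purely descriptive look at such variability is to summarize the spread of beat-to-beat intervals. The sketch below is only that: the interval values are invented for illustration, the beat_variability helper is hypothetical, and the mean and standard deviation are simple linear statistics, not the nonlinear techniques referred to in the text.

```python
import statistics


def beat_variability(intervals_s):
    """Basic statistics of a series of beat-to-beat intervals, in seconds."""
    mean_interval = statistics.mean(intervals_s)
    return {
        "mean_interval_s": mean_interval,
        "sd_interval_s": statistics.stdev(intervals_s),   # sample standard deviation
        "mean_rate_bpm": 60.0 / mean_interval,
        "rates_bpm": [60.0 / x for x in intervals_s],      # beat-by-beat rate
    }


# Invented intervals between successive beats (not measured data).
intervals = [0.80, 0.82, 0.79, 0.85, 0.81, 0.78, 0.83]
summary = beat_variability(intervals)
print(f"mean interval: {summary['mean_interval_s']:.3f} s")
print(f"standard deviation: {summary['sd_interval_s']:.3f} s")
print(f"mean rate: {summary['mean_rate_bpm']:.1f} beats/min")
```

A strictly periodic signal would give a standard deviation of zero; any nonzero spread is the quasi-periodic behaviour described above.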

Ron S. Leder is a native of New York City. He obtained the PhD degree in biomedical engineering from Wisconsin University, Madison, WI, under the direction of Dr. John Webster. He has experience in data acquisition and signal processing, fields to which he has contributed several papers. Besides, he has been an active participant in the IEEE/EMBS. Currently, he is with the Department of Biomedical Engineering at the Universidad Autónoma Metropolitana-Iztapalapa in Mexico, DF.