Assembly Language Step by Step 1992

.pdfColumn 5 contains a 0; skip it.

Column 6 contains a 1. This column's value is 64; add 64 to the tally.

Column 7 contains a 1. This column's value is 27, or 128; add 128 to the tally. What do we have? 218. It's as easy as that.

Converting from decimal to binary, while more difficult, is done exactly the same way as converting from decimal to hex. Go back and read that section again, searching for the general method used. (You can also see section 1.8 for more information.) In other words, see what was done and separate the essential principles from any references to a specific base like hex.

I'll bet by now you can figure it out without much trouble.

As a brief aside, perhaps you noticed that I started counting columns from 0 rather than 1. A peculiarity of the computer field is that we always begin counting things from 0. Actually, that's unfair; the computer's method is the reasonable one, because 0 is a perfectly good number and should not be discriminated against. The rift occurred because in our world, counting things tells us how many things are there, while in the computer world counting things is more generally done to name them. That is, we need to deal with bit 0, and then bit 1, and so on, far more than we need to know how many bits there are. This is not a quibble, by the way. The issue will come up again and again in connection with memory addresses, which as I have said and will say again, are the key to understanding assembly language.

In programming circles, always begin counting from 0!

This is a good point to get some practice in converting numbers from binary to decimal

and back. Sharpen your teeth on these:

110 10001

11111011

101

1100010111010010

11000

1011

When that's done, convert these decimal values to binary:

77

42

106

255

18

6309

121

58

18,446

Why Binary?

If it takes eight whole digits (11011010) to represent an ordinary three-digit number like 218, binary as a number base would seem to be a bad intellectual investment. Certainly for us it would be a waste of mental bandwidth, and even aliens with only two fingers would probably have come up with a better system.

The problem is, lights are either on or they're off.

This is just another way of saying (as I'll discuss in detail in the next chapter) that at the bottom of it, computers are electrical devices. In an electrical device, voltage is either present or it isn't; current either flows or it doesn't. Very early in the game, computer scientists decided that the presence of a voltage in a computer circuit would indicate a 1 digit, while lack of a voltage at that same point in the circuit would indicate a 0 digit This is the only reason we use binary, but it's a pretty compelling one, and we're stuck with it. However, you will not necessarily drown in 1s and 0s, because I've already taught you a form of shorthand.

1.8 Hexadecimal as Shorthand for Binary

The number 218 expressed in binary is 11011010B. Expressed in hex, however, the same value is quite compact: DAH. The two hex digits comprising DAH merit a closer look. AH (or OAH as your assembler will require it for reasons I'll explain later) represents 10 decimal. Converting any number to binary simply involves detecting the powers of 2 within it. The largest power of 2 within 10 decimal is 8. Jot down 1 and subtract 8 from 10. What's left is 2. Even though 4 is a power of two, no 4's are hiding within 2. Write a 0 to the right of the 1. The next smallest power of 2 is 2, and there is a 2 in 2. Jot down another 1 to the right of the 0. Subtract 2 from 2 and you get 0, so there are no 1s left in the number. Jot down a final 0 to the right of the rest of the numbers to represent the 1s column. What you have is this:

1010

Look back at the binary equivalent of 218: 11011010. The last four digits are 1010. 1010 is the binary equivalent of OAH.

The same will work for the upper half of DAH. ODH, if you work out the binary

equivalence as we just did (and it would be good mental exercise), is 1101. Look at the |

||

binary equivalent of 218 this way: |

||

|

218 |

decimal |

1101 1010 |

binary |

|

D |

A |

hex |

It should be dawning on you that you can convert long strings of binary 1s and 0s into more compact hex format by converting every four binary digits (starting from the right, not from the left) into a single hex digit.

As an example, here is a 32-bit binary number that is not the least bit remarkable:

11110000000000001111101001101110

This is a pretty obnoxious collection of bits to remember or manipulate, so let's split it up into groups of four from the right:

1111 0000 0000 0000 1111 1010 0110 1110

Each of these groups of four binary digits can be represented by a single hexadecimal digit. Do the conversion now. What you should get is the following:

1111 0000 0000 0000 1111 1010 0110 1110

F |

0 |

0 |

0 |

F |

A |

6 |

E |

In other words, the hex equivalent of that mouthful is FOOOFA6E

In use, of course, you would append an H on the end, and also put a 0 at the beginning, so the number would actually be written OFOOOFA6EH.

This is still a good-sized number, but such 32-bit addresses are the largest quantities you will typically encounter in journeyman-level, assembly language-programming. Most hexadecimal numbers you will encounter are either four or two hex digits long instead. Furthermore, the PC's CPU likes to deal with 32-bit addresses 16 bits at a time, so most of the time you will look upon enormous hex numbers like OFOOFA6EH as the pair

OFOOH and OFA6EH.

Suddenly, this business starts looking a little more graspable.

Hexadecimal is the programmer's shorthand for the computer's binary numbers.

This is why I said earlier that computers use base 2 (binary) and base 16 (hexadecimal)

both at the same time in a rather schizoid fashion. What I didn't say is that the computer isn't really the schizoid one; you are. At their very heart (as I'll explain in the next chapter) computers use only binary. Hex is a means by which you and I make dealing with the computer easier. Fortunately, every four binary digits may be represented by a hex digit, so the correspondence is clean and comprehensible.

Prepare to Compute

Everything up to this point has been necessary groundwork. I've explained conceptually what computers do and given you the tools to understand the slightly alien numbers they use. But I've said nothing so far about what computers actually are, and it's well past time. We'll return to hexadecimal numbers again and again in this book; I've said nothing about hex multiplication or bit-banging. The reason is plain: Before you can bang a bit, you must know where the bits live. So let's lift the hood and see if we can catch a few in action.

Lifting The Hood

Discovering What Computers Actually Are

2.1RAXie, We Hardly Knew Ye... >• 42

2.2Switches, Transistors, and Memory >• 43

2.3The Shop Foreman and the Assembly Line >• 53

2.4The Box that Follows a Plan >• 58

2.1 RAXie, We Hardly Knew Ye...

In 1970, I was a senior in high school, and the Chicago Public Schools had installed a computer somewhere. A truckful of these fancy typewriter gimcracks was delivered to Lane Tech, and a bewildered math teacher was drafted into teaching computer science (they had the nerve to call it) to a high school full of rowdy males.

I figured it out fairly quickly. You pounded out a deck of these goofy computer cards on the card punch, dropped them into the hopper of one of the typewriter gimcracks, and watched in awe as the typewriter danced its little golfball over the greenbar paper, printing out your inevitable list of error messages. It was fun. I got straight As. I even kept the first program I ever wrote that did something useful: a little deck of cards that generated a table of parabolic correction factors for hand-figuring telescope mirrors.

The question that kept gnawing at me was exactly what sort of beast RAX (the computer's wonderfully appropriate name) actually was. What we had were ram-charged typewriters that RAX controlled over phone lines. But what was RAX?

I asked the instructor. In brief, the conversation went something like this:

ME: "Umm, sir, what exactly is RAX?"

HE: "Eh? Um, a computer. An electronic computer."

ME: "That's what it says on the course notes. But I want to know what RAX is made of and how it works."

HE: "Well, I'm sure RAX is all solid-state."

ME: "You mean, there's no levers and gears inside." HE: "Oh, there may be a few. But no radio tubes."

ME: "I wasn't worried about tubes. I suppose it has a calculator in it somewhere. But what makes it remember that A comes before B? How does it know what FORMAT means? How does it tell time? What does it have to do to dial the phone?"

HE: "Now, come on, that's why computers are so great! They put it all together so that we don't have to worry about that sort of thing! Who cares what RAX is? RAX knows FORTRAN, and will execute any correct FORTRAN program. That's what matters, isn't it?"

He was starting to sweat. So was I. End of conversation.

That June, I graduated with three inches of debugged and working FORTRAN punch cards in my bookbag, and still had absolutely no clue as to what RAX was.

It has bothered me to this day.

Gus to the Rescue

I was thinking about RAX six years later, while on the Devon Avenue bus heading for work, with the latest copy of Popular Electronics in my lap. The lead story involved a little thing called the COSMAC ELF, which consisted of a piece of perfboard full of integrated circuit chips, all wired together, plus some toggle switches and a pair of LED numeric displays.

It was a computer. (Said so right on the label.) The article told us how to put it together, and that was about all. What did those chips do? What did the whole thing do? It was driving me nuts.

As usual, my friend Gus Flassig got on the bus at Ashland Avenue and sat down beside me. I asked him what the damned thing did. He was the first human being to make the concept hang together for me:

"These are memory chips. You load numbers into the memory chips by flipping these switches in different code patterns. Each number means something to the CPU chip. One number makes it add; another number makes it subtract; another makes it write different numbers into memory, and lots of other things. A program consists of a bunch of these instruction numbers in a row in memory. The computer reads the first number, does what

the number instructs it to do, and then reads the second one, does what that number says to do, and so on until it runs out of numbers."

If you don't find that utterly clear; don't worry. I had the advantage of being an electronics hobbyist (so I knew what some of the chips did) and had already written some programs in RAX's FORTRAN. But for me, my God, everything suddenly hit critical mass and exploded in my head until the steam started pouring out of my ears.

No matter what RAX was, I knew that he had to be something like the COSMAC ELF on a larger scale. I built an ELF. It was quite an education, and allowed me to understand the nature of computers at a very deep level. I don't recommend that anybody but total crazies wirewrap their own machines out of loose chips anymore, although it was a common enough thing to do in the mid-to late Seventies. In this chapter, I'll try and provide you with some of the insights that I obtained while assembling my own machine the hard way. (You wonder where the "hard" in "hardware" comes from? Not from the sound it makes when you bang it on the table, promise...)

2.2 Switches, Transistors, and Memory

Switches remember.

Think about it. You flip the switch by the door, and the light in the middle the ceiling comes on. It stays on. When you leave the room, you flip the switch down again, and the light goes out. It stays out. Poltergeists notwithstanding, the switch will remain in the position you last left it until you (or someone else) come back and flip it to its other position.

In a sense, it remembers what its last command was until you change it, and "overwrite" that command with a new one. In this sense, a light switch represents a sort of rudimentary memory element.

Light switches are more mechanical than electrical, which does not prevent them from acting as memory; in fact, the very first computer (Babbage's 19th-century difference engine) was entirely mechanical. In fact, the far larger version he designed but never finished was to have been steam powered. Babbage's machine had lots of little cams that could be flipped by other cams from one position to another. Numbers were encoded and remembered as patterns of cam positions.

One if by Land

Whether a switch is mechanical, electrical, hydraulic, or something else is irrelevant.

What counts is that a switch contains a pattern: On or off; up or down, flow or no flow. To that pattern can be assigned a meaning. Paul Revere told his buddy to set up a code in the Old North Church: "One if by land, two if by sea." Once lit, the lamps in the steeple remained lit (and thus remembered that very important code) long enough for Paul to call out the militia and whup the British.

In general then, what we call "memory" is an aggregate of switches that will retain a pattern long enough for that pattern to be read and understood by a person or a mechanism. For our purposes, those switches will be electrical, but keep in mind that both mechanical and hydraulic computers have been proposed and built with varying degrees of success.

Memory consists of containers for alterable patterns that retain an entered pattern until someone or something alters the pattern.

Transistor Switches

One problem with building a computer memory system of light switches is that light switches are pretty specialized: they require fingers to set them, and their "output" is a current path for electricity. Ideally, a computer memory switch should be operated by the same force it controls to allow the patterns in memory locations to be passed on to other memory locations. In the gross electromechanical world, such a switch is called a "relay." A relay is a switch that is operated by electricity, and also controls electricity. You "flip" a relay by feeding it a pulse of electricity, which powers a little hammer that whaps a lever to one side or another. This lever then opens or closes a set of electrical contacts, just as your garden-variety light switch does. Computers have been made out of relays, although as you might imagine (with a typical relay being about the size of an ice-cube) they weren’t especially powerful computers.

Fully electronic computers are made out of transistor switches. Transistors are tiny crystals of silicon that use the peculiar electrical properties of silicon to act as switches. I won’t try to explain what these properties are, since that explanation would take an entire (fat) book unto itself. Let’s consider a transistor switch a sort of electrical ‘black box’ and describe it in terms of inputs and outputs.

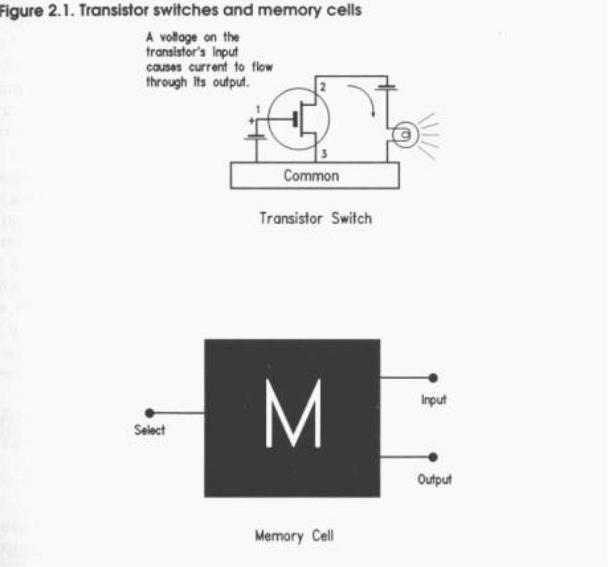

Figure 2.1 shows a transistor switch. When an electrical current is fed through pin 1, current ceases to flow between pins 2 and 3.

In real life, a tiny handful of other components (typically diodes and capacitors) are necessary to make things work smoothly in a computer memory context. These components are not necessarily little gizmos connected by wires to the outside of the

transistor (although in early transistorized computers they were), but are now cut from the same silicon crystal the transistor itself is cut from, and occupy almost no space at all. Taken together, the transistor switch and its support components are called a memory cell. I've hidden the electrical complexity of the memory cell within an appropriate black-box symbol in Figure 2.1.

A memory cell keeps current flow through it to a minimum, because electrical current flow produces heat, and heat is the enemy of electrical components. The memory cell's circuit is arranged so that if you put a tiny voltage on its input pin and a similar voltage on its select pin, a voltage will appear and remain on its output pin. That output voltage will remain in its set state until you take away the voltage from the cell as a whole, or remove the voltage from the input pin while putting a voltage on the select pin.

The "on" voltage being applied to all of these pins is kept at a consistent level. (Except, of course, when it is removed entirely.) In other words, you don't put 12 volts on the input

pin and then change that to 6 volts or 17 volts. The computer designers pick a voltage and stick with it. (Most memory cells operate at a constant 5 volts, although many operate at 12 volts.) The pattern is binary in nature: you either put a voltage on the input pin or you take away the voltage entirely. Likewise, the output pin either holds a fixed voltage or no voltage at all.

We apply a code to that state of affairs: the presence of voltage indicates a binary 1, and the lack of voltage indicates a binary 0. This code is arbitrary. We could as well have said that the lack of voltage indicates a binary 1 and vise versa (and computers have been built this way for obscure reasons) but the choice is up to us. Having the presence of something indicate a binary 1 is more natural, and that is the way things have evolved in the computing mainstream.

A single computer memory cell, such as the transistor-based one we're speaking of here, holds one binary digit, either a 1 or a 0. This is called a bit. A bit is the indivisible atom of information. There is no half-a-bit, and no bit-and-a-half. (This has been tried. It works badly. But that didn't stop it from being tried.)

A bit is a single binary digit, either 1 or 0.

The Incredible Shrinking Bit

One bit doesn't tell us much. To be useful, we need to bring a lot of memory cells together. Transistors started out small (the originals from the Fifties looked a lot like stove-pipe hats for tin soldiers) and went down from there. The first transistors were created from little chips of silicon crystal about an eighth of an inch square. The size of the crystal chip hasn't changed outrageously since then, but the transistors have shrunk incredibly.

In the beginning, one chip held one transistor. In time, the designers crisscrossed the chip into four equal areas, making each area an independent transistor. From there it was an easy jump to adding the other minuscule components needed to turn a transistor into a computer memory cell.

The silicon chip was a tiny and fragile thing, and was encased in an oblong molded plastic housing, like a stick of Dentyne gum with metal legs for the electrical connections.

What we had now was a sort of electrical egg carton: four little cubbyholes, each of which could contain a single binary bit. Then the shrinking process began: first 8 bits, then 16 bits, then multiples of 8 and 16, all on the same tiny silicon chip. By the late Sixties, 256 memory cells could be made on one chip of silicon, usually in an array of 8 cells by 32. In 1976, my COSMAC ELF computer contained two memory chips. On each chip was an