- •Biological and Medical Physics, Biomedical Engineering

- •Medical Image Processing

- •Preface

- •Contents

- •Contributors

- •1.1 Medical Image Processing

- •1.2 Techniques

- •1.3 Applications

- •1.4 The Contribution of This Book

- •References

- •2.1 Introduction

- •2.2 MATLAB and DIPimage

- •2.2.1 The Basics

- •2.2.2 Interactive Examination of an Image

- •2.2.3 Filtering and Measuring

- •2.2.4 Scripting

- •2.3 Cervical Cancer and the Pap Smear

- •2.4 An Interactive, Partial History of Automated Cervical Cytology

- •2.5 The Future of Automated Cytology

- •2.6 Conclusions

- •References

- •3.1 The Need for Seed-Driven Segmentation

- •3.1.1 Image Analysis and Computer Vision

- •3.1.2 Objects Are Semantically Consistent

- •3.1.3 A Separation of Powers

- •3.1.4 Desirable Properties of Seeded Segmentation Methods

- •3.2 A Review of Segmentation Techniques

- •3.2.1 Pixel Selection

- •3.2.2 Contour Tracking

- •3.2.3 Statistical Methods

- •3.2.4 Continuous Optimization Methods

- •3.2.4.1 Active Contours

- •3.2.4.2 Level Sets

- •3.2.4.3 Geodesic Active Contours

- •3.2.5 Graph-Based Methods

- •3.2.5.1 Graph Cuts

- •3.2.5.2 Random Walkers

- •3.2.5.3 Watershed

- •3.2.6 Generic Models for Segmentation

- •3.2.6.1 Continuous Models

- •3.2.6.2 Hierarchical Models

- •3.2.6.3 Combinations

- •3.3 A Unifying Framework for Discrete Seeded Segmentation

- •3.3.1 Discrete Optimization

- •3.3.2 A Unifying Framework

- •3.3.3 Power Watershed

- •3.4 Globally Optimum Continuous Segmentation Methods

- •3.4.1 Dealing with Noise and Artifacts

- •3.4.2 Globally Optimal Geodesic Active Contour

- •3.4.3 Maximal Continuous Flows and Total Variation

- •3.5 Comparison and Discussion

- •3.6 Conclusion and Future Work

- •References

- •4.1 Introduction

- •4.2 Deformable Models

- •4.2.1 Point-Based Snake

- •4.2.1.1 User Constraint Energy

- •4.2.1.2 Snake Optimization Method

- •4.2.2 Parametric Deformable Models

- •4.2.3 Geometric Deformable Models (Active Contours)

- •4.2.3.1 Curve Evolution

- •4.2.3.2 Level Set Concept

- •4.2.3.3 Geodesic Active Contour

- •4.2.3.4 Chan–Vese Deformable Model

- •4.3 Comparison of Deformable Models

- •4.4 Applications

- •4.4.1 Bone Surface Extraction from Ultrasound

- •4.4.2 Spinal Cord Segmentation

- •4.4.2.1 Spinal Cord Measurements

- •4.4.2.2 Segmentation Using Geodesic Active Contour

- •4.5 Conclusion

- •References

- •5.1 Introduction

- •5.2 Imaging Body Fat

- •5.3 Image Artifacts and Their Impact on Segmentation

- •5.3.1 Partial Volume Effect

- •5.3.2 Intensity Inhomogeneities

- •5.4 Overview of Segmentation Techniques Used to Isolate Fat

- •5.4.1 Thresholding

- •5.4.2 Selecting the Optimum Threshold

- •5.4.3 Gaussian Mixture Model

- •5.4.4 Region Growing

- •5.4.5 Adaptive Thresholding

- •5.4.6 Segmentation Using Overlapping Mosaics

- •5.6 Conclusions

- •References

- •6.1 Introduction

- •6.2 Clinical Context

- •6.3 Vessel Segmentation

- •6.3.1 Survey of Vessel Segmentation Methods

- •6.3.1.1 General Overview

- •6.3.1.2 Region-Growing Methods

- •6.3.1.3 Differential Analysis

- •6.3.1.4 Model-Based Filtering

- •6.3.1.5 Deformable Models

- •6.3.1.6 Statistical Approaches

- •6.3.1.7 Path Finding

- •6.3.1.8 Tracking Methods

- •6.3.1.9 Mathematical Morphology Methods

- •6.3.1.10 Hybrid Methods

- •6.4 Vessel Modeling

- •6.4.1 Motivation

- •6.4.1.1 Context

- •6.4.1.2 Usefulness

- •6.4.2 Deterministic Atlases

- •6.4.2.1 Pioneering Works

- •6.4.2.2 Graph-Based and Geometric Atlases

- •6.4.3 Statistical Atlases

- •6.4.3.1 Anatomical Variability Handling

- •6.4.3.2 Recent Works

- •References

- •7.1 Introduction

- •7.2 Linear Structure Detection Methods

- •7.3.1 CCM for Imaging Diabetic Peripheral Neuropathy

- •7.3.2 CCM Image Characteristics and Noise Artifacts

- •7.4.1 Foreground and Background Adaptive Models

- •7.4.2 Local Orientation and Parameter Estimation

- •7.4.3 Separation of Nerve Fiber and Background Responses

- •7.4.4 Postprocessing the Enhanced-Contrast Image

- •7.5 Quantitative Analysis and Evaluation of Linear Structure Detection Methods

- •7.5.1 Methodology of Evaluation

- •7.5.2 Database and Experiment Setup

- •7.5.3 Nerve Fiber Detection Comparison Results

- •7.5.4 Evaluation of Clinical Utility

- •7.6 Conclusion

- •References

- •8.1 Introduction

- •8.2 Methods

- •8.2.1 Linear Feature Detection by MDNMS

- •8.2.2 Check Intensities Within 1D Window

- •8.2.3 Finding Features Next to Each Other

- •8.2.4 Gap Linking for Linear Features

- •8.2.5 Quantifying Branching Structures

- •8.3 Linear Feature Detection on GPUs

- •8.3.1 Overview of GPUs and Execution Models

- •8.3.2 Linear Feature Detection Performance Analysis

- •8.3.3 Parallel MDNMS on GPUs

- •8.3.5 Results for GPU Linear Feature Detection

- •8.4.1 Architecture and Implementation

- •8.4.2 HCA-Vision Features

- •8.4.3 Linear Feature Detection and Analysis Results

- •8.5 Selected Applications

- •8.5.1 Neurite Tracing for Drug Discovery and Functional Genomics

- •8.5.2 Using Linear Features to Quantify Astrocyte Morphology

- •8.5.3 Separating Adjacent Bacteria Under Phase Contrast Microscopy

- •8.6 Perspectives and Conclusions

- •References

- •9.1 Introduction

- •9.2 Bone Imaging Modalities

- •9.2.1 X-Ray Projection Imaging

- •9.2.2 Computed Tomography

- •9.2.3 Magnetic Resonance Imaging

- •9.2.4 Ultrasound Imaging

- •9.3 Quantifying the Microarchitecture of Trabecular Bone

- •9.3.1 Bone Morphometric Quantities

- •9.3.2 Texture Analysis

- •9.3.3 Frequency-Domain Methods

- •9.3.4 Use of Fractal Dimension Estimators for Texture Analysis

- •9.3.4.1 Frequency-Domain Estimation of the Fractal Dimension

- •9.3.4.2 Lacunarity

- •9.3.4.3 Lacunarity Parameters

- •9.3.5 Computer Modeling of Biomechanical Properties

- •9.4 Trends in Imaging of Bone

- •References

- •10.1 Introduction

- •10.1.1 Adolescent Idiopathic Scoliosis

- •10.2 Imaging Modalities Used for Spinal Deformity Assessment

- •10.2.1 Current Clinical Practice: The Cobb Angle

- •10.2.2 An Alternative: The Ferguson Angle

- •10.3 Image Processing Methods

- •10.3.1 Previous Studies

- •10.3.2 Discrete and Continuum Functions for Spinal Curvature

- •10.3.3 Tortuosity

- •10.4 Assessment of Image Processing Methods

- •10.4.1 Patient Dataset and Image Processing

- •10.4.2 Results and Discussion

- •10.5 Summary

- •References

- •11.1 Introduction

- •11.2 Retinal Imaging

- •11.2.1 Features of a Retinal Image

- •11.2.2 The Reason for Automated Retinal Analysis

- •11.2.3 Acquisition of Retinal Images

- •11.3 Preprocessing of Retinal Images

- •11.4 Lesion Based Detection

- •11.4.1 Matched Filtering for Blood Vessel Segmentation

- •11.4.2 Morphological Operators in Retinal Imaging

- •11.5 Global Analysis of Retinal Vessel Patterns

- •11.6 Conclusion

- •References

- •12.1 Introduction

- •12.1.1 The Progression of Diabetic Retinopathy

- •12.2 Automated Detection of Diabetic Retinopathy

- •12.2.1 Automated Detection of Microaneurysms

- •12.3 Image Databases

- •12.4 Tortuosity

- •12.4.1 Tortuosity Metrics

- •12.5 Tracing Retinal Vessels

- •12.5.1 NeuronJ

- •12.5.2 Other Software Packages

- •12.6 Experimental Results and Discussion

- •12.7 Summary and Future Work

- •References

- •13.1 Introduction

- •13.2 Volumetric Image Visualization Methods

- •13.2.1 Multiplanar Reformation (2D slicing)

- •13.2.2 Surface-Based Rendering

- •13.2.3 Volumetric Rendering

- •13.3 Volume Rendering Principles

- •13.3.1 Optical Models

- •13.3.2 Color and Opacity Mapping

- •13.3.2.2 Transfer Function

- •13.3.3 Composition

- •13.3.4 Volume Illumination and Illustration

- •13.4 Software-Based Raycasting

- •13.4.1 Applications and Improvements

- •13.5 Splatting Algorithms

- •13.5.1 Performance Analysis

- •13.5.2 Applications and Improvements

- •13.6 Shell Rendering

- •13.6.1 Application and Improvements

- •13.7 Texture Mapping

- •13.7.1 Performance Analysis

- •13.7.2 Applications

- •13.7.3 Improvements

- •13.7.3.1 Shading Inclusion

- •13.7.3.2 Empty Space Skipping

- •13.8 Discussion and Outlook

- •References

- •14.1 Introduction

- •14.1.1 Magnetic Resonance Imaging

- •14.1.2 Compressed Sensing

- •14.1.3 The Role of Prior Knowledge

- •14.2 Sparsity in MRI Images

- •14.2.1 Characteristics of MR Images (Prior Knowledge)

- •14.2.2 Choice of Transform

- •14.2.3 Use of Data Ordering

- •14.3 Theory of Compressed Sensing

- •14.3.1 Data Acquisition

- •14.3.2 Signal Recovery

- •14.4 Progress in Sparse Sampling for MRI

- •14.4.1 Review of Results from the Literature

- •14.4.2 Results from Our Work

- •14.4.2.1 PECS

- •14.4.2.2 SENSECS

- •14.4.2.3 PECS Applied to CE-MRA

- •14.5 Prospects for Future Developments

- •References

- •15.1 Introduction

- •15.2 Acquisition of DT Images

- •15.2.1 Fundamentals of DTI

- •15.2.2 The Pulsed Field Gradient Spin Echo (PFGSE) Method

- •15.2.3 Diffusion Imaging Sequences

- •15.2.4 Example: Anisotropic Diffusion of Water in the Eye Lens

- •15.2.5 Data Acquisition

- •15.3 Digital Processing of DT Images

- •15.3.2 Diagonalization of the DT

- •15.3.3 Gradient Calibration Factors

- •15.3.4 Sorting Bias

- •15.3.5 Fractional Anisotropy

- •15.3.6 Other Anisotropy Metrics

- •15.4 Applications of DTI to Articular Cartilage

- •15.4.1 Bovine AC

- •15.4.2 Human AC

- •References

- •Index


C. Couprie et al. |

most cases is set to be a scalar functional with values in $\mathbb{R}^+$. In other words, the GAC equation finds the solution of:

$$
\operatorname*{argmin}_{C} \int_{C} g(s)\,ds, \tag{3.5}
$$

where C is a closed contour or surface. This is the minimal closed path or minimal closed surface problem, i.e. finding the closed contour (or surface) with minimum weight defined by g. In addition to simplified understanding and improved consistency, (3.4) has the required form for Weickert’s PDE operator splitting [28, 68], which allows PDEs to be solved using separated semi-implicit schemes for improved efficiency. These advances made GAC a reference method for segmentation, which is now widely used and implemented in many software packages such as ITK. GAC is an important interactive segmentation method because, as with all level-set methods, the placement of the initial contour matters greatly. Constraints such as forbidden or attracting zones can all be set through the function g, which has an easy interpretation.

As an example, to attract the GAC towards zones of actual image contours, we could set

$$
g \equiv \frac{1}{1 + |\nabla I|^{p}}, \tag{3.6}
$$

with p = 1 or 2. We see that, for this function, g is small (costs little) in zones where the gradient is high. Many other functions that decrease monotonically as $|\nabla I|$ increases can be used instead. One point to note is that GAC has a so-called shrinking bias, because the globally optimal solution of (3.5) is simply the null contour (the energy is then zero). In practice, this can be avoided with balloon forces, but the model is then again non-geometric. Because GAC can only find a local optimum, this is not a serious problem, but it does mean that contours are biased towards smaller solutions.
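As a rough illustration of (3.6), the following sketch computes g on a small image stored as a list of rows. The function name `edge_stopping`, the finite-difference scheme, and the toy image are assumptions made for this example only; they are not taken from the chapter or from any particular library.

```python
def edge_stopping(image, p=2):
    """Return g = 1 / (1 + |grad I|^p) for a 2D image given as a list of rows.

    Hypothetical helper: the gradient is estimated with central differences
    in the interior and one-sided differences at the borders.
    """
    rows, cols = len(image), len(image[0])
    g = [[0.0] * cols for _ in range(rows)]
    for y in range(rows):
        for x in range(cols):
            x0, x1 = max(x - 1, 0), min(x + 1, cols - 1)
            y0, y1 = max(y - 1, 0), min(y + 1, rows - 1)
            gx = (image[y][x1] - image[y][x0]) / (x1 - x0) if x1 > x0 else 0.0
            gy = (image[y1][x] - image[y0][x]) / (y1 - y0) if y1 > y0 else 0.0
            grad_norm = (gx * gx + gy * gy) ** 0.5
            g[y][x] = 1.0 / (1.0 + grad_norm ** p)
    return g


# Toy image with a vertical step edge between columns 1 and 2.
image = [[0, 0, 10, 10] for _ in range(4)]
g = edge_stopping(image, p=2)
```

On this toy image, g equals 1 in the flat regions and drops to 1/26 on the two columns next to the step, so a contour passing along the step accumulates far less cost than one crossing a flat region.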

3.2.5 Graph-Based Methods

The solution to (3.5) proposed in the previous section was in fact inspired by preexisting discrete solutions to the same problem. On computers, talking about continuous-form solutions is a bit of a misnomer: only the mathematical formulation is continuous, while the computations and the algorithms are all necessarily discrete to be computable. The idea behind discrete algorithms is to embrace this constraint and embed the discrete nature of numerical images in the formulation itself.

3.2.5.1 Graph Cuts

We consider an image as a graph Γ(V, E) composed of n vertices V and m edges E. For instance, a 2D N × N 4-connected square grid image will have $n = N^2$ vertices

3 Seeded Segmentation Methods for Medical Image Analysis |


and m = 2 × N × (N − 1) edges.¹ We assume that both the edges and the vertices are weighted. The vertices will typically hold image pixel values, and the edge values relate to the gradient between their corresponding adjacent pixels, but this is not necessary. We furthermore assume that a segmentation of the graph can be represented as a graph partition, i.e.:

$$
V = \bigcup_{V_i \subset \Gamma} V_i; \quad \forall i \neq j,\ V_j \cap V_i = \emptyset. \tag{3.7}
$$

Then $E^*$ is the set of edges whose two end vertices lie in different partitions:

$$
E^* = \{ e = \{p_i, p_j\} \in E,\ p_i \in V_i;\ p_j \in V_j,\ i \neq j \}. \tag{3.8}
$$

The set $E^*$ is called the cut, and the cost of the cut is the sum of the edge weights that belong to the cut:

$$
C(E^*) = \sum_{e \in E^*} w_e, \tag{3.9}
$$

where $w_e$ is the weight of individual edge e. We assume these weights to be positive. Reinterpreting these weights as capacities, and specifying a set of vertices as connected to a source s and a distinct set connected to a sink t, the celebrated 1962 Ford and Fulkerson result [25] is the following:

Theorem 3.1. Let P be a path in Γ from s to t. A flow through that path is a quantity which is constrained by the minimum capacity along the path. The edges with this capacity are said to be saturated, i.e. the flow that goes through them is equal to their capacity. For a finite graph, there exists a maximum flow that can go through the whole graph Γ. This maximum flow saturates a set of edges $E_s$. This set of edges defines a cut between s and t, and this cut has minimal weight.

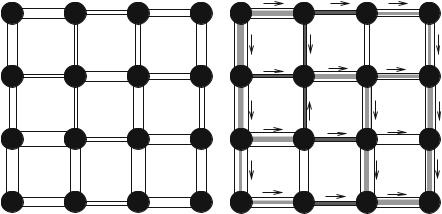

This theorem is illustrated in Fig. 3.2.

In 2D, if Γ is planar, this duality essentially says that the Ford and Fulkerson minimum cut can be interpreted as a shortest path in a suitable dual graph to Γ [2]. In arbitrary dimension, the maxflow-mincut duality allows us to compute discrete minimal hypersurfaces by optimizing a discrete version of (3.4).
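To make the maxflow-mincut duality concrete, here is a minimal sketch on a four-vertex graph. It uses a breadth-first augmenting-path scheme (Edmonds-Karp), chosen here only for brevity; the function name `max_flow_min_cut`, the graph, and its weights are invented for illustration, and the code assumes an undirected graph in which both s and t have at least one edge.

```python
from collections import deque


def max_flow_min_cut(capacity, s, t):
    """Augmenting-path max flow on an undirected weighted graph.

    capacity: dict mapping frozenset({u, v}) -> positive edge weight.
    Returns (flow value, set of edges forming a minimum cut).
    """
    # An undirected edge provides its capacity in both directions.
    residual = {}
    for edge, w in capacity.items():
        u, v = tuple(edge)
        residual.setdefault(u, {})[v] = w
        residual.setdefault(v, {})[u] = w

    def reachable_from_source():
        # Breadth-first search along edges with remaining capacity.
        parents = {s: None}
        queue = deque([s])
        while queue:
            u = queue.popleft()
            for v, c in residual[u].items():
                if c > 0 and v not in parents:
                    parents[v] = u
                    queue.append(v)
        return parents

    flow = 0
    while True:
        parents = reachable_from_source()
        if t not in parents:
            break  # no augmenting path left: the flow is maximal
        path, v = [], t
        while parents[v] is not None:
            path.append((parents[v], v))
            v = parents[v]
        bottleneck = min(residual[u][v] for u, v in path)
        for u, v in path:
            residual[u][v] -= bottleneck
            residual[v][u] += bottleneck
        flow += bottleneck

    # Edges leaving the set still reachable from s are saturated: they form the cut.
    source_side = set(parents)
    cut = {e for e in capacity if len(e & source_side) == 1}
    return flow, cut


capacity = {
    frozenset({"s", "a"}): 3, frozenset({"s", "b"}): 3,
    frozenset({"a", "b"}): 1,
    frozenset({"a", "t"}): 1, frozenset({"b", "t"}): 2,
}
flow, cut = max_flow_min_cut(capacity, "s", "t")
```

In this toy graph the two edges entering t are the saturated ones, and their total weight equals the value of the maximum flow, as the theorem states.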

There exist many algorithms that can be used to compute the maximum flow in a graph (also called a network in this framework), but none with linear complexity. Augmenting-path algorithms [7] are effective in 2D, where the number of vertices is relatively high compared to the number of edges. In 3D and above, where the reverse is true, push-relabel algorithms [27] are more efficient. These algorithms can only be used when there is one source and one sink. The case where there are multiple sources or sinks is known to be NP-hard. To compute energies comprising several sources or sinks and leading to multi-label segmentation, approximations

¹ This particular computation is left as an exercise to the reader.


Fig. 3.2 (a) A graph with edge weights interpreted as capacities, shown as varying diameters in this case. (b) A maximum flow on this graph. We see that the saturated vertices (in black) separate s from t, and they form a cut of minimum weight

can be used, such as α-expansions. These can be used to formulate and optimize complex discrete energies with MRF interpretations [8, 66], but the solution is only approximate. Under some conditions, the result is not necessarily a local minimum of the energy, but can be guaranteed not to be too far from the globally optimal energy (within a known factor, often 2).

In the last 10 years, graph cut (GC) methods have become extremely popular due to their ability to solve a large number of problems in computer vision, particularly in stereo-vision and image restoration. In image analysis, their ability to form a globally optimal binary partition with a geometric interpretation is very useful. However, GC do have some drawbacks: they are not easy to parallelize, they are not very efficient in 3D, and they have a so-called shrinking bias, just like GAC and continuous maxflow. In addition, they have a grid bias, meaning that they tend to find contours and surfaces that follow the principal directions of the underlying graph. This results in “blocky” artifacts, which may or may not be problematic.

Due to their relationship with sources and sinks, which can be seen as internal and external markers, as well as the possibility of modifying the weights in the graph to select or exclude zones, GC are at least as interactive as the continuous methods of the previous sections.

3.2.5.2 Random Walkers

In order to correct some of the problems inherent to graph cuts, Grady introduced the Random Walker (RW) in 2004 [29, 32]. We place ourselves in the same framework as for graph cuts, with a weighted graph, but we consider from the start


a multilabel problem, and, without loss of generality, we assume that the edge weights are all normalized between 0 and 1. This way, they represent the probability that a random particle may cross a particular edge to move from a vertex to a neighboring one. Given a set of starting points on this graph for each label, the algorithm considers the probability for a particle moving freely and randomly on this weighted graph to reach any arbitrary unlabeled vertex in the graph before any other particle coming from the other labels. A vector of probabilities, one for each label, is therefore computed at each unlabeled vertex. The algorithm considers the computed probabilities at each vertex and assigns the label of the highest probability to that vertex.

Intuitively, if close to a label starting point the edge weights are close to 1, then its corresponding “random walker” will indeed walk around freely, and the probability to encounter it will be high. So the label is likely to spread unless some other labels are nearby. Conversely, if somewhere edge weights are low, then the RW will have trouble crossing these edges. To relate these observations to segmentation, let us assume that edge weights are high within objects and low near object boundaries. Furthermore, suppose that a label starting point is set within an object of interest while some other labels are set outside of it. In this situation, the RW is likely to assign the same label to the entire object and no further, because it spreads quickly within the object but is essentially stopped at the boundary. Conversely, the RW spreads the other labels outside the object, and these are also stopped at the boundary. Eventually, the whole image is labeled, with the object of interest consistently labeled with a single value.

This process is similar in some ways to classical segmentation procedures like seeded region growing [1], but has some interesting differentiating properties and characteristics. First, even though the RW explanation sounds stochastic, in reality the probability computations are deterministic. Indeed, there is a strong relationship between random walks on discrete graphs and various physical interpretations. For instance, if we equate each edge weight with an electrical conductance (the inverse of a resistance) of the same value, thereby forming a resistance lattice, and if we set a starting label at 1 V and all the other labels at 0 V, then the probability of the RW to reach a particular vertex will be the same as its voltage calculated by the classical Kirchhoff’s laws on the resistance lattice [24]. The problem of computing these voltages or probabilities is also the same as solving the discrete Dirichlet problem for the Laplace equation, i.e. the equivalent of solving $\nabla^2 \phi = 0$ in the continuous domain with some suitable boundary conditions [38]. To solve the discrete version of this equation, discrete calculus can be used [33], which in this case boils down to solving a linear system involving the graph Laplacian matrix. This is not too costly, as the matrix is large but very sparse. Typically, computing the RW is less costly and more easily parallelizable than GC, as it exploits the many advances realized in numerical analysis and linear algebra over the past few decades.
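The deterministic nature of the computation can be seen in a small sketch. Instead of a sparse linear solver, it swaps in a plain Gauss-Seidel relaxation of the discrete Dirichlet problem, which is adequate only for tiny graphs. The function name `random_walker`, the five-vertex chain, and its weights are assumptions made for this example. As in the text, a high weight makes an edge easy to cross, and the graph is assumed to be connected.

```python
def random_walker(num_vertices, edges, seeds, iterations=500):
    """Seeded random-walker labelling on a small weighted graph.

    edges: dict {(u, v): weight}, weights in (0, 1].
    seeds: dict {vertex: label}.
    Returns (labels, probabilities); probabilities[label][v] is the probability
    that a walker starting at v first reaches a seed carrying `label`.
    """
    neighbors = {v: [] for v in range(num_vertices)}
    for (u, v), w in edges.items():
        neighbors[u].append((v, w))
        neighbors[v].append((u, w))

    label_names = sorted(set(seeds.values()))
    probabilities = {}
    for label in label_names:
        # Dirichlet boundary conditions: 1 on this label's seeds, 0 on the others.
        x = [1.0 if seeds.get(v) == label else 0.0 for v in range(num_vertices)]
        for _ in range(iterations):
            for v in range(num_vertices):
                if v in seeds:
                    continue
                total = sum(w for _, w in neighbors[v])
                # Discrete Laplace equation: each value is the weighted mean
                # of its neighbours' values.
                x[v] = sum(w * x[u] for u, w in neighbors[v]) / total
        probabilities[label] = x

    labels = [max(label_names, key=lambda name: probabilities[name][v])
              for v in range(num_vertices)]
    return labels, probabilities


# Chain 0-1-2-3-4 with a weak edge (a "boundary") between vertices 2 and 3.
edges = {(0, 1): 1.0, (1, 2): 1.0, (2, 3): 0.01, (3, 4): 1.0}
seeds = {0: "A", 4: "B"}
labels, probabilities = random_walker(5, edges, seeds)
```

Label A spreads freely over vertices 1 and 2 and stops at the weak edge, so vertex 3 takes label B. The probabilities coincide with the voltages of the equivalent series circuit; for instance, vertex 2 receives 101/103 for label A.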

The RW method has some interesting properties with respect to segmentation. It is quite robust to noise and can cope well with weak boundaries (see Fig. 3.3). Remarkably, in spite of the RW being a purely discrete process, it exhibits no grid bias. This is due to the fact that level lines of the resistance distance (i.e. the